SHARP? SuperChain? Layer-3s? Temporary Rollups? AHHHHHH

Will rollups be deployed as silos? Or form part of a larger SuperSHARP Chain?

As we are all aware, we need more than one chain, and certainly more than one rollup, to help scale Ethereum for mass adoption.

Before we dive in — why rollups on top of Ethereum?

Remember — the core innovation that underpins the field of cryptocurrency is trust engineering — our ability to define, measure and minimise trust when interacting with a counterparty.

All systems, no matter how well designed, rely on an element of trust to protect both safety and liveness. In most cases, a system assumes that a threshold of parties will honestly follow the defined protocol; common thresholds include N of N parties, an honest majority, or K of N parties.

Interestingly, in the field of trust engineering, the cheeky approach is to introduce a trusted third party (TTP) who can work with one honest party to protect the system. In academic research, papers that take this approach are often disregarded because it feels like cheating.

In the context of rollups, we do have a trusted third party: the bridge contract running on Ethereum. This is why the approach is so exciting. Ethereum becomes a root of trust that, with the assistance of one honest party, protects all systems deployed on top of it.

You can read this article for more along this line of thinking, but in short, this is why rollups are built on top of Ethereum. Now we focus on the how, which brings us to a fundamental question for a multi-chain world:

Will rollups be deployed as silos on Ethereum?

Can we deploy a new off-chain system/chain on top of a rollup?

Let’s find out!

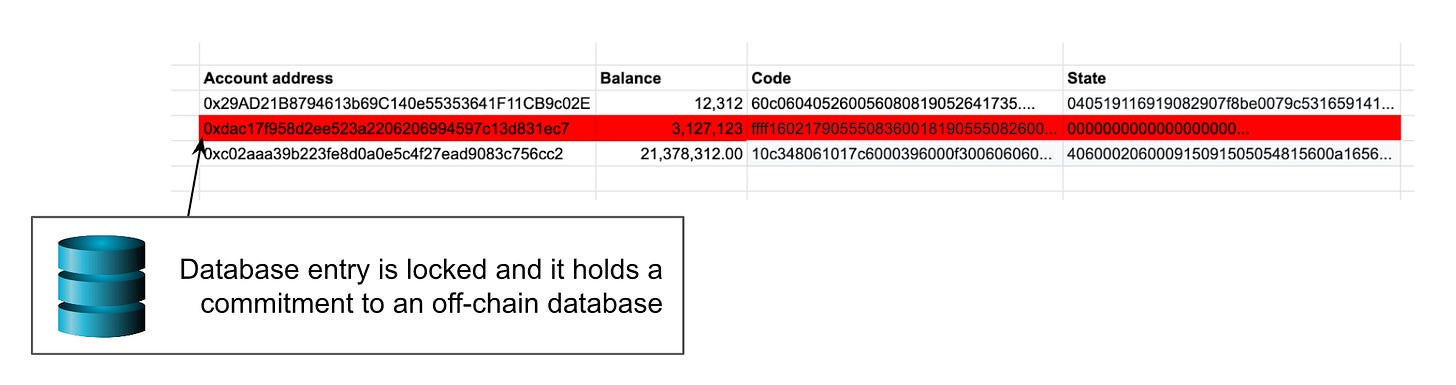

How the Bridge Locks a Database Entry

As a bit of background, there are two components to a rollup:

Off-chain database. It records the liabilities for the rollup including account balances, program state, smart contract code, etc.

On-chain bridge. It holds all the assets and it is responsible for upholding the safety & liveness of the off-chain database.

In a rollup, it is the bridge smart contract, and no one else, that has sole discretion to decide the accepted state of the off-chain database.

Now — the question is — what does this look like at a technical level?

The bridge, just like any smart contract, locks a single entry (row) in the underlying database for its own purpose. It has a smart contract address, a balance, code, and state, and all of it is recorded in that single database row.

So, when we create a new bridge, let’s say from:

Ethereum → Arbitrum,

Ethereum → Optimism,

Ethereum → StarkNet.

The bridge smart contract locks a row in Ethereum’s database and stores an anchor for the new off-chain database. In practice, the anchor is a cryptographic commitment that represents the off-chain database’s entire state. It is up to the smart contract code to decide what the next commitment (the next accepted state) of the off-chain database will be.
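To make the "single row plus anchor" idea concrete, here is a minimal Python sketch under toy assumptions: the commitment is a SHA-256 hash over a canonical JSON encoding of the whole off-chain database (real rollups use structures such as Merkle trees), and the `is_valid` callback is a hypothetical stand-in for whatever fraud-proof or validity-proof check a real bridge runs. The names `Bridge`, `commit`, `propose` and the address `0xBridge` are illustrative only.

```python
import hashlib
import json
from typing import Callable


def commit(state: dict) -> str:
    """Toy commitment: SHA-256 over a canonical JSON encoding of the whole
    off-chain database. Real rollups use structures such as Merkle trees."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


class Bridge:
    """Toy bridge occupying a single 'row' in the L1 database: an address,
    a balance of locked assets, its own rules (code), and its state, which
    is just the anchor (commitment) for the off-chain database."""

    def __init__(self, address: str, genesis_state: dict):
        self.address = address
        self.balance = 0  # assets locked in the bridge
        self.anchor = commit(genesis_state)

    def propose(self, new_anchor: str, is_valid: Callable[[str, str], bool]) -> bool:
        """The bridge alone decides the next accepted state. `is_valid` is a
        hypothetical stand-in for a fraud-proof or validity-proof check."""
        if is_valid(self.anchor, new_anchor):
            self.anchor = new_anchor
            return True
        return False


# Usage: move from the genesis state to a new accepted state.
bridge = Bridge("0xBridge", {"alice": 10, "bob": 0})
next_state = {"alice": 7, "bob": 3}
accepted = bridge.propose(commit(next_state), is_valid=lambda old, new: True)
rejected = bridge.propose("not-a-real-anchor", is_valid=lambda old, new: False)
```

The point of the design is that L1 never stores the off-chain database itself, only a 32-byte anchor, and only the bridge's own rule can replace it.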

A Land of Siloed Rollups

To date, all rollups have been deployed on top of Ethereum, and there is a growing list of projects, including Arbitrum, Optimism, Scroll, StarkEx, zkSync, etc.

There are many benefits to deploying your service as a rollup on top of Ethereum including:

Secure access to >$400bn in assets,

A user can deposit their coins held on Ethereum into the rollup without loss of security,

Changed computational model,

It makes computation cheap, but data availability expensive — ideal for smart contract deployment and experimentation with new DeFi primitives.

Granularly controlled user experience,

A centralised sequencer can offer a user experience that is on par with trusted cryptocurrency exchanges.

Experimental virtual machines,

The ability to experiment with new features/execution/computation/languages while still inheriting the security of Ethereum.

All rollup deployments to date have empirically demonstrated how the technology stack can be adopted by projects to offer financial services while allowing software (and not humans) to protect billions of dollars.

The main issue with rollup deployments is the infrastructure overhead that comes with them. Every rollup has its own bridge smart contract suite, sequencers, executors, security councils, and RPC node providers, and generally a very different approach to decentralising the technology stack.

If we want cryptocurrency services to eventually replace their existing Web2 technology stack with a rollup (aka validating bridges), then there is simply too much overhead.

There must be a better way, right?

Disclaimer: Rollup technology is still in its infancy. You can and should evaluate the risk of each project on L2BEAT. We assume the reader is familiar with the separation of Sequencers and Executors. If not, then check out this article.

A Whole New World on Top of Rollups?

What if we could deploy a bridge to a new off-chain system on top of a rollup and re-use a lot of its existing infrastructure?

Is this possible? And are there benefits to doing so?

Yes!

A rollup is an ideal platform for deploying new bridges due to how it changes the computational model for smart contracts. It becomes significantly cheaper (financially) to execute actions on the bridge and pass messages between different bridges.

Additionally, it leads to an interesting mental model for layers of a blockchain system:

Layer 1 → Data availability layer

Layer 2 → Execution layer

Layer 3 → Off-chain systems

The separation of chains is in line with the modular design approach taken by proof-of-stake Ethereum.

So, if we assume that new chains will be deployed as layer-3s on top of layer-2 rollup bridges, then what will this look like?

What Will The Off-chain Systems on Top of a Rollup Look Like?

Let’s refresh our memory on what it means to inherit security from Ethereum for an off-chain system:

Data availability. Ethereum guarantees anyone can get a copy of an update for the off-chain database and apply it to their local copy of the database.

Database integrity. Ethereum guarantees that all updates to the off-chain database are valid and released in a timely manner.
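The two guarantees can be sketched together in Python: an observer fetches every published update (data availability), replays it on a local copy, and checks the result against each anchor the bridge accepted (database integrity). This is a toy model under stated assumptions: updates are plain key-to-value writes, the commitment is SHA-256 over canonical JSON, and `apply_update` and `sync_and_check` are hypothetical names.

```python
import hashlib
import json


def commit(state: dict) -> str:
    # Toy commitment (assumption): SHA-256 over canonical JSON, standing in for a state root.
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


def apply_update(state: dict, update: dict) -> dict:
    """Apply one published update, modelled as key -> new value writes."""
    new_state = dict(state)
    new_state.update(update)
    return new_state


def sync_and_check(local: dict, updates: list, anchors: list) -> dict:
    """Replay every published update on a local copy (data availability) and
    check each intermediate state against the anchor the bridge accepted
    (database integrity)."""
    if len(updates) != len(anchors):
        raise ValueError("every update needs a matching anchor")
    for update, anchor in zip(updates, anchors):
        local = apply_update(local, update)
        if commit(local) != anchor:
            raise ValueError("local copy diverges from the bridge's anchor")
    return local


# Usage: the bridge accepted one anchor per update; an observer rebuilds the database.
genesis = {"alice": 10, "bob": 0}
updates = [{"alice": 7, "bob": 3}, {"bob": 1, "carol": 2}]
anchors, s = [], genesis
for u in updates:
    s = apply_update(s, u)
    anchors.append(commit(s))
rebuilt = sync_and_check(genesis, updates, anchors)
```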

In an ideal world, we want the same security goals to apply for an off-chain system that is deployed on top of a rollup.

Stronger Trust Assumptions for Data Availability

The problem of data availability is a real pickle.

There is no discount for posting the data on top of a rollup: the off-chain system will incur approximately the same data cost as the underlying rollup, because the cost of data availability is ultimately charged by Ethereum.

This leads to an obvious question:

Is it practical, or does it even make sense, to publish the off-chain system’s data on top of a rollup?

It is not necessarily a straightforward answer, but it is also not the only option. There are alternative solutions, albeit with stronger trust assumptions, to ensure the data is publicly available. For example, a project can adopt a data availability committee to attest to the data, or it can simply post the data to another blockchain system (like Celestia).

There is also growing evidence that projects who want to deploy an off-chain system are willing to adopt a cheaper data availability approach (with stronger trust assumptions) as long as they can still leverage the underlying rollup to protect database integrity. For example, several companies have adopted StarkNet’s Validium committee, and Reddit’s Community Points run on Arbitrum Nova.

Regardless, we will not dwell on the problem of data availability. There is a far more interesting discussion to dive into as we discuss the goal of database integrity.

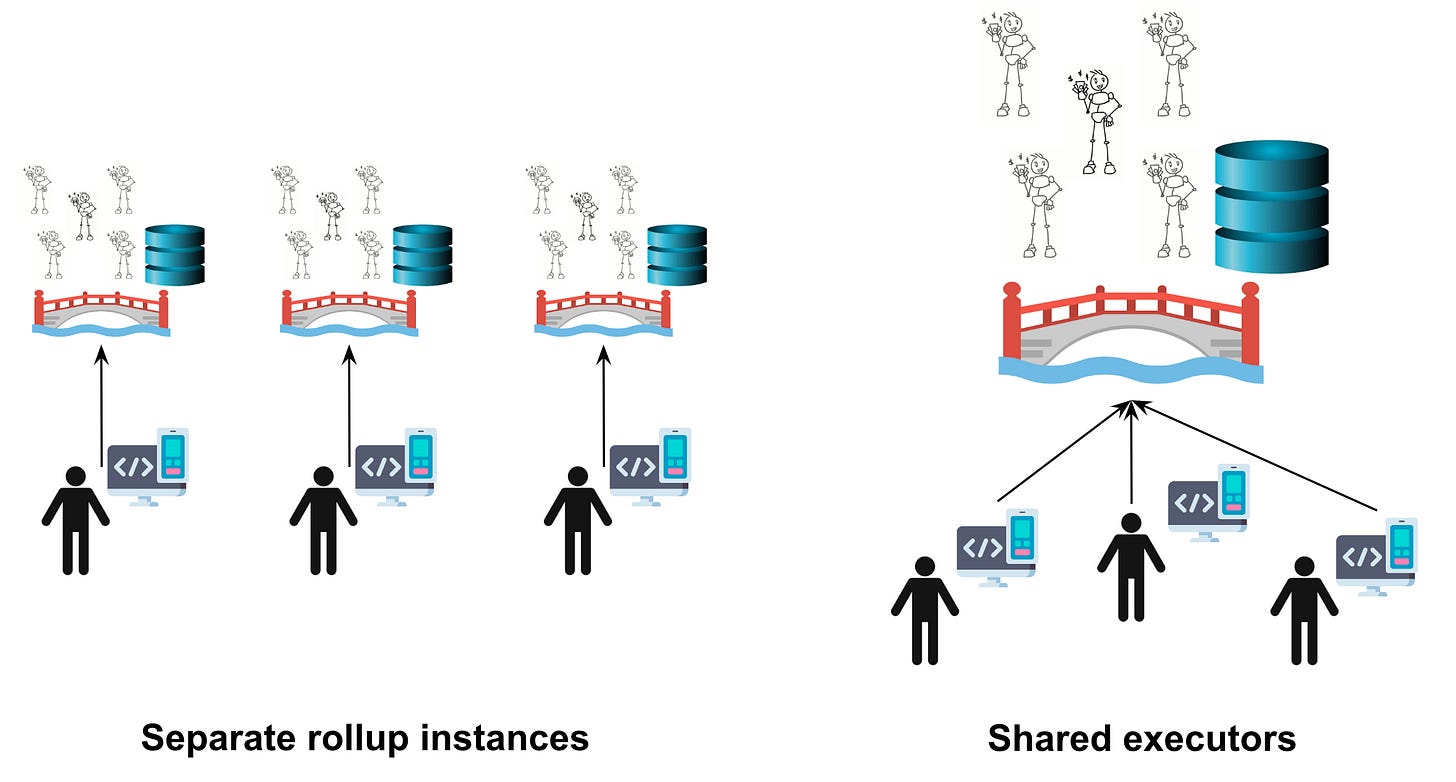

Who Will Be the Executors for the Off-Chain System?

If a project decides to deploy their own off-chain system, then we must assume they want to run the Sequencer and control the privileged role for deciding the order of transaction execution. Otherwise, they might as well deploy the smart contract suite to an existing rollup.

One of the critical design choices for a project is to decide what to do about the executors:

If an off-chain system is deployed on top of a rollup, then is it possible to re-use the same set of executors as the underlying rollup? Or is there a benefit to picking a new set of executors for this off-chain system?

The answer is surprising.

It is typically assumed, at least by me, that the primary role of an executor is to ultimately defend the off-chain system.

After all, we only need one honest executor to pick up the list of pending transactions, execute them, and convince the bridge about the final execution. If there is no honest party, then the off-chain system may halt indefinitely.
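A minimal Python sketch of the one-honest-party assumption, assuming a toy transfer ledger: the bridge accepts the first executor claim that matches the correct post-state and halts if no executor provides one. Having the bridge simply re-execute is only a stand-in for a real fraud or validity proof, and the executors `offline`, `malicious` and `honest` are hypothetical.

```python
from typing import Callable, List, Optional

State = dict
# Hypothetical transaction format: (sender, receiver, amount).
Executor = Callable[[State, list], Optional[State]]


def execute(state: State, txs: list) -> State:
    """Deterministic state transition: apply transfers in sequenced order,
    skipping any transfer that would overdraw the sender."""
    new = dict(state)
    for sender, receiver, amount in txs:
        if new.get(sender, 0) >= amount:
            new[sender] = new.get(sender, 0) - amount
            new[receiver] = new.get(receiver, 0) + amount
    return new


def settle(state: State, pending: list, executors: List[Executor]) -> Optional[State]:
    """Bridge side: accept the first claim that checks out. Re-executing here
    is a toy stand-in for a fraud or validity proof. A single honest executor
    is enough; if none responds correctly, the system halts (returns None)."""
    expected = execute(state, pending)
    for executor in executors:
        claim = executor(state, pending)
        if claim is not None and claim == expected:
            return claim
    return None


def offline(state: State, txs: list) -> Optional[State]:
    return None  # never responds


def malicious(state: State, txs: list) -> Optional[State]:
    return {"mallory": 1_000_000}  # claims a bogus post-state


honest = execute

state = {"alice": 10, "bob": 0}
pending = [("alice", "bob", 4), ("bob", "alice", 9)]  # second tx overdraws and is skipped
with_honest = settle(state, pending, [offline, malicious, honest])
without_honest = settle(state, pending, [offline, malicious])
```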

However, solely focusing on the one honest party assumption is very likely the wrong mental model for projects who want to deploy their application on top of a rollup. The technology stack should already take care of that problem for them and empower an honest party to step up at any time.

In practice, a project needs to envision the type of computation their off-chain system will require and which set of executors are capable of performing it.

Do they need their own independent set of executors? Or can they pay a fee to use the underlying rollup’s existing set of executors?

Let’s find out! Ahhh!

Project Appoints Independent Executors

An off-chain system can have:

High computational requirements that the underlying rollup’s executors cannot facilitate without charging an exceedingly expensive fee,

Proprietary software the underlying rollup’s executors cannot easily run,

A large and ever-growing database to query.

A project can pick its own executors to enable specialised execution, as dedicated executors can perform a crazy quantity of execution in a cost-effective manner.

This type of setup lets the off-chain system act like an oracle. It can perform the execution and convince the bridge that the execution is correct before the result is passed on to other smart contracts. As such, all dependent smart contracts can have some confidence the result is correct before using it.

It potentially enables a new paradigm for passing messages in a blockchain context and we can call it a convincing oracle. The bridge’s primary role is no longer about protecting locked assets, but checking the validity of all messages sent from the off-chain system.
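The key property of a convincing oracle is that checking a result is much cheaper than producing it. A toy Python sketch, assuming integer square roots as the workload: the executor computes the root (`math.isqrt` stands in for genuinely heavy, specialised execution), while the bridge-side check needs only two multiplications. Real systems would verify a validity or fraud proof instead, and `ConvincingOracle`, `submit` and `read` are hypothetical names.

```python
import math
from typing import Optional


def offchain_isqrt(n: int) -> int:
    """Executor side: the 'heavy' job. math.isqrt is only a stand-in for
    specialised, expensive execution."""
    return math.isqrt(n)


def bridge_verify(n: int, r: int) -> bool:
    """Bridge side: checking a claimed integer square root needs only two
    multiplications, which is cheaper than computing the root."""
    return r >= 0 and r * r <= n < (r + 1) * (r + 1)


class ConvincingOracle:
    """Toy oracle contract: results are stored only after the bridge-side
    check passes, so dependent contracts never read an unverified value."""

    def __init__(self) -> None:
        self.results: dict = {}

    def submit(self, n: int, r: int) -> bool:
        if bridge_verify(n, r):
            self.results[n] = r
            return True
        return False

    def read(self, n: int) -> Optional[int]:
        return self.results.get(n)


oracle = ConvincingOracle()
query = 10**30 + 12345
good = oracle.submit(query, offchain_isqrt(query))
bad = oracle.submit(99, 10)  # 10 * 10 = 100 > 99, so this claim is rejected
```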

Some startups like AltLayer have already worked this out — although perhaps not as clearly as explained here — as they enable projects to spawn temporary off-chain systems to perform high loads of burst execution. For example, they have recently supported a large-scale NFT airdrop.

Of course, the off-chain system can be a permanent instance like indexing off-chain data and making it available to on-chain smart contracts or a temporary instance to handle a burst of traffic. Nevertheless, I am super excited to see what applications emerge and adopt this approach in the coming years.

Project Adopts Executors From the Rollup

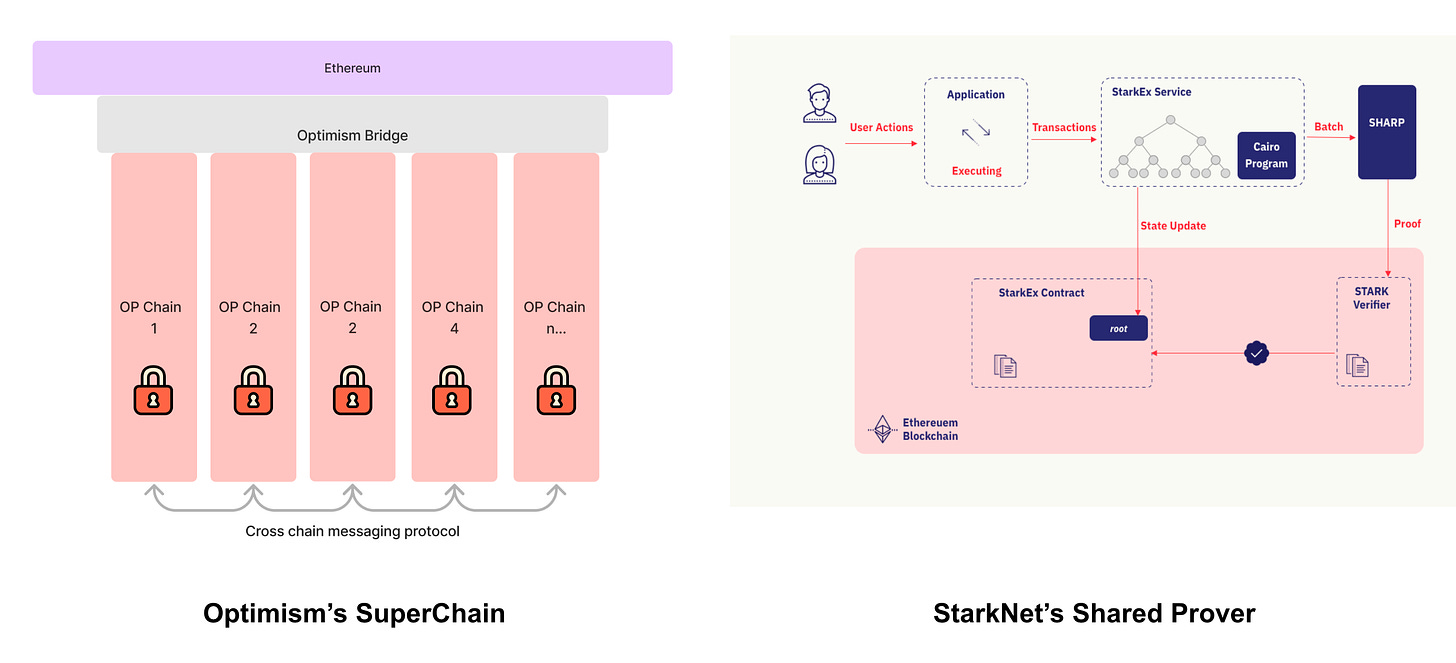

The idea behind StarkNet’s SHARP and Optimism’s SuperChain is to empower projects to deploy a bridge, establish an off-chain database, and run the Sequencer for the off-chain system.

However, and most crucially, the project will only sequence users’ transactions and leave the final execution to the underlying rollup’s executors.

Put another way, if the off-chain system’s execution requirements are reasonable and it is cost-effective to rely on the underlying rollup’s executors, then why not just re-use them for it?

There are interesting properties to consider for such a setup:

Off-chain database. The project’s Sequencer still has full control over the order of transactions for its off-chain database, which can still logically be a silo.

Automatic execution. The underlying rollup, for a fee, will execute all sequenced transactions from the off-chain system.

Globally sequenced. All transactions, across all off-chain systems, can have a single total ordering.

Synchronisation. The underlying rollup’s sequencers, with the help of Sequencers from the various off-chain systems, can enable atomicity of transaction bundles across different off-chain systems.
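The global ordering and shared execution properties can be sketched in Python as follows. This is an assumption-laden toy: each project's Sequencer fixes the order within its own batch, the rollup's sequencer interleaves batches round-robin (a hypothetical policy), and transactions are simple key-value writes. Each system's state stays separate, so it can still be a logical silo, even though every transaction sits in one shared queue.

```python
from dataclasses import dataclass, field


@dataclass
class OffchainSystem:
    name: str
    state: dict = field(default_factory=dict)


def global_order(batches: dict) -> list:
    """Merge per-system batches into one total order. Each project's Sequencer
    fixes the order inside its own batch; the interleaving policy here
    (round-robin) is a hypothetical choice by the rollup's sequencer."""
    queue = []
    iterators = {name: iter(txs) for name, txs in batches.items()}
    while iterators:
        for name in list(iterators):
            tx = next(iterators[name], None)
            if tx is None:
                del iterators[name]  # this system's batch is exhausted
            else:
                queue.append((name, tx))
    return queue


def shared_execute(systems: dict, queue: list) -> None:
    """The rollup's executors walk the single queue in order and route each
    transaction, modelled as a (key, value) write, to its own system."""
    for name, (key, value) in queue:
        systems[name].state[key] = value


batches = {"A": [("x", 1), ("x", 2)], "B": [("y", 5)]}
queue = global_order(batches)
systems = {name: OffchainSystem(name) for name in batches}
shared_execute(systems, queue)
```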

The immediate benefit is to allow a project to pass on the hard work of executing transactions. Its only responsibility is to deploy a bridge, its smart contract suite on the new off-chain system, and the necessary infrastructure to run a Sequencer and sequence transactions for their users.

In fact, Rollups-as-a-Service companies may very well offer a one-click deployment solution for the off-chain infrastructure, enabling any Web2 company to replace its legacy infrastructure with a rollup-like off-chain system.

Additionally, the off-chain system no longer lives in a silo and can access dapps deployed on other off-chain systems. In fact, all off-chain systems that share the same executors will have the same transaction queue, and there will be a single total ordering for all transactions across the various systems.

If the Sequencers can cooperate, then atomic transaction bundles can be enabled that either execute in their entirety or do not execute at all. For example, a single bundle can allow the user to transact on system A, move funds from bridge A to bridge B, and then transact on system B.
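An all-or-nothing bundle like this can be sketched in Python, assuming the shared executors can stage every step on a scratch copy of all affected systems and commit only if the whole bundle succeeds. The `bridge_move` step (lock on A, credit on B) and the account names are hypothetical, and how Sequencers would actually coordinate such bundles remains an open research question.

```python
import copy


class InsufficientFunds(Exception):
    pass


def transfer(balances: dict, sender: str, receiver: str, amount: int) -> None:
    if balances.get(sender, 0) < amount:
        raise InsufficientFunds(f"{sender} cannot send {amount}")
    balances[sender] = balances.get(sender, 0) - amount
    balances[receiver] = balances.get(receiver, 0) + amount


def bridge_move(user: str, amount: int):
    """Hypothetical cross-system step: lock funds in bridge A, credit them on B."""
    def step(systems: dict) -> None:
        transfer(systems["A"], user, "bridge_A", amount)
        systems["B"][user] = systems["B"].get(user, 0) + amount
    return step


def execute_bundle(systems: dict, bundle: list) -> bool:
    """All-or-nothing: run every step on a scratch copy of every system and
    commit only if all steps succeed; otherwise nothing changes."""
    scratch = copy.deepcopy(systems)
    try:
        for step in bundle:
            step(scratch)
    except InsufficientFunds:
        return False
    systems.clear()
    systems.update(scratch)
    return True


systems = {"A": {"alice": 10}, "B": {}}
bundle = [
    lambda s: transfer(s["A"], "alice", "dex_A", 2),   # transact on system A
    bridge_move("alice", 5),                           # bridge A -> bridge B
    lambda s: transfer(s["B"], "alice", "shop_B", 5),  # transact on system B
]
first = execute_bundle(systems, bundle)
snapshot = copy.deepcopy(systems)
# Overspends on B, so the whole bundle (including the bridge move) is dropped.
second = execute_bundle(systems, [bridge_move("alice", 3),
                                  lambda s: transfer(s["B"], "alice", "shop_B", 4)])
```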

There are many open research questions on how to achieve atomicity and synchronisation with this layer-3 style setup, but it is indeed within the realm of possibility.

Finally, I’d speculate that the real benefit of deploying an off-chain system in this manner is to minimise liability as an operator. The project’s operators only need to take care of sequencing transactions for their users, a role very similar to that of a block producer. Everything else, such as custody of funds, censorship resistance, RPC services, and executing transactions, can be taken care of for them by the underlying rollup.

p.s. There are interesting cost-savings for data availability too. Transactions can be batched together and only a state-diff is posted on-chain. There is already so much information to take in, so let’s leave that for a future post :)
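As a small taste of the state-diff idea, here is a hedged Python sketch, assuming balances are a plain key-value map: only keys whose final value changed are posted, so transfers that cancel out inside a batch cost nothing on-chain. This is not any specific rollup's encoding; `apply_transfers` and `state_diff` are hypothetical helpers.

```python
def apply_transfers(state: dict, txs: list) -> dict:
    """Apply (sender, receiver, amount) transfers in order (no balance checks)."""
    new = dict(state)
    for sender, receiver, amount in txs:
        new[sender] = new.get(sender, 0) - amount
        new[receiver] = new.get(receiver, 0) + amount
    return new


def state_diff(before: dict, after: dict) -> dict:
    """Keep only keys whose final value changed; intermediate values vanish.
    A deleted key would show up with the value None."""
    keys = before.keys() | after.keys()
    return {k: after.get(k) for k in sorted(keys) if before.get(k) != after.get(k)}


before = {"alice": 10, "bob": 5}
batch = [("alice", "bob", 3), ("bob", "alice", 3), ("alice", "carol", 1)]
diff = state_diff(before, apply_transfers(before, batch))
```

Three transfers here collapse into two diff entries, because bob's balance ends where it started.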

What Is the Takeaway?

We have tried to envision how projects may deploy a new off-chain system on top of a rollup and in this pursuit there are two questions to answer:

How will they solve the data availability problem? And what trade-offs are they willing to make?

Who will take the published transactions and eventually execute them?

We did not spend a significant portion of time on data availability, as it was the latter question that piqued our interest in writing this blog post.

As someone who is trained in protocol design using applied cryptography, the first and foremost mindset is to pick the executor set which is most likely to have one honest party who can step up to protect it.

But — this is the wrong mindset!

How a project picks the executor set really depends on the new off-chain system’s envisioned usage and the financial costs associated with its execution.

Perhaps to establish an initial rule of thumb:

Convincing oracle. If the off-chain system requires crazy quantities of execution to produce a single result, then it makes sense to have its own executor set and proprietary software.

Gateway system. If it is financially affordable for users to rely on the underlying rollup’s executors, then the benefits of synchronisation and minimal liability make it a clear choice.

I’m sure the rule of thumb will expand, but this is my initial attempt at trying to articulate it. It is also why I find the announcements of StarkNet’s SHARP, Optimism’s SuperChain, and even Arbitrum’s Nova so exciting.

All approaches make subtle assumptions about how a project will pick its set of executors. SHARP and SuperChain assume the project will re-use the same set of executors, whereas Nova allows the project to appoint its own execution committee.

If I had to take a stab at answering that, I suspect the vast majority of projects will pivot towards the gateway model using SHARP or a SuperChain, as all the hard work is abstracted away alongside out-of-the-box global access to other off-chain systems.

It is a very attractive setup and may very well be why Coinbase decided to adopt the OP Stack. If successful, Coinbase can become a gateway rather than a gatekeeper as startups deploy services on top of its off-chain system.

It will be interesting to see if a centralised gatekeeper on legacy Web2 technology can keep up with the mesh of venture funded startups replicating the same features and empowering the market to decide which service is the best/winner.

I hope you have all enjoyed this article and thanks to Ser Chris Buckland for skimming it. 🚀
