Modular Design and the Two Blockchains

Replacing a monolithic codebase

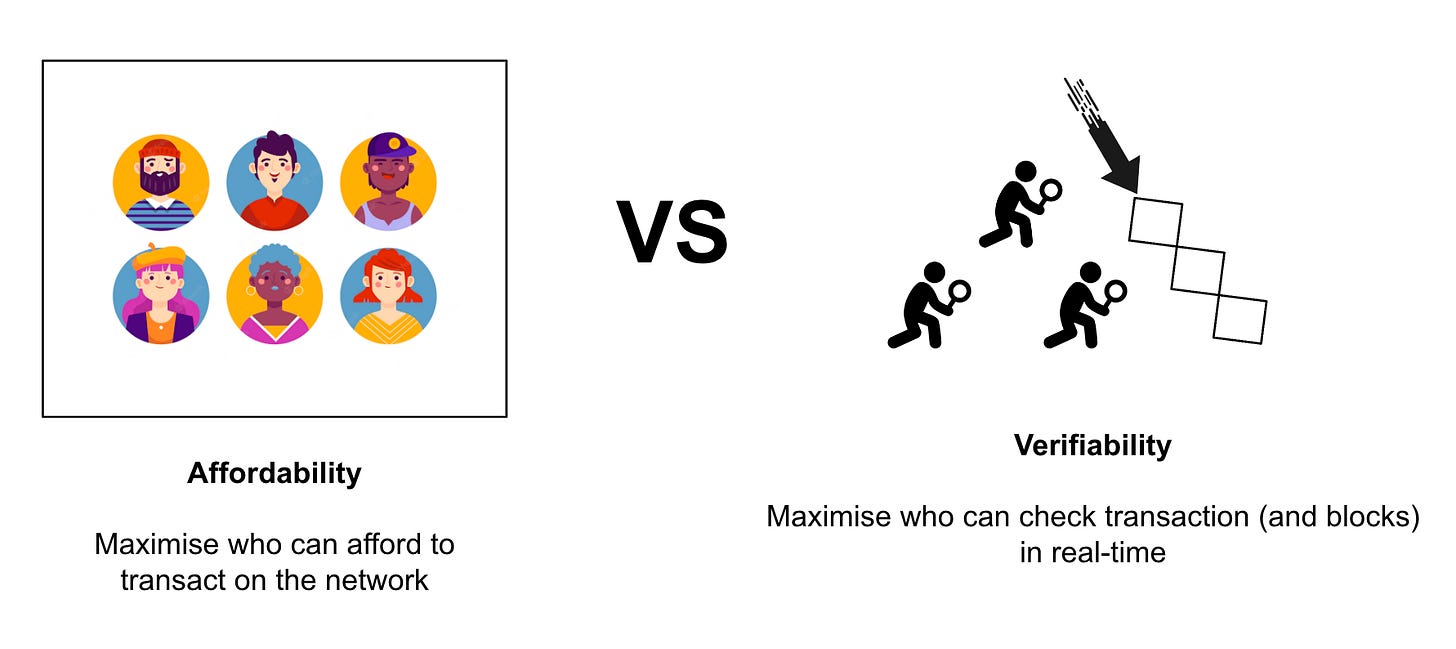

The pursuit of blockchain scalability has led to several ambitious proposals over the past 10 years. At the heart of the problem is a fundamental trade-off between two properties:

Affordability. Population of users who can afford to transact on a network.

Verifiability. Population of users with the hardware and bandwidth needed to check the integrity of transactions in real time.

Many modern blockchain systems prioritise affordability and the maximum number of users above all else, assuming the network operators are willing to put in all the hard work to help realise that goal. This assumption, that operators should work hard, pushes a blockchain system towards centralisation because it reduces who can participate as an operator, especially in networks that require operators to purchase hardware from approved manufacturers.

This is not to say modern blockchain designs are wrong, but to date they have all failed to garner the same traction as Bitcoin or Ethereum. One prominent reason, in our opinion, is this fundamental trade-off. It was at the heart of the Bitcoin block size wars, and the community ultimately decided to prioritise who can verify the network’s integrity over its long-term affordability.

The hope, just like in Ethereum, is that affordability can be pushed to another layer via the Lightning Network, a sidechain, or a rollup. The Ethereum community, roadmap, and ethos descend from the same background. The only difference is that Ethereum has an ingrained social contract that enables its community to actually change the underlying platform in pursuit of overcoming this dilemma.

If there is a single takeaway that is unrelated to proof of stake, it is that the TPS (transactions per second) metric is meaningless in the context of a decentralised network. Scalability is not just about increasing throughput at all costs and instead it should be defined as the following:

Increasing the transaction throughput while still adhering to the same set of compute, bandwidth and storage requirements to run a fully validating node.
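
To make the definition concrete, here is a minimal sketch in Python. The class, the function, and every number in it are hypothetical and chosen only for illustration: a change counts as a scalability gain only if throughput rises while the requirements for a fully validating node stay within the same budget.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeRequirements:
    """Resources needed to run a fully validating node (illustrative units)."""
    cpu_cores: int
    bandwidth_mbps: int
    storage_gb: int

    def within(self, budget: "NodeRequirements") -> bool:
        # Every resource must stay at or below the budget, not just one of them.
        return (
            self.cpu_cores <= budget.cpu_cores
            and self.bandwidth_mbps <= budget.bandwidth_mbps
            and self.storage_gb <= budget.storage_gb
        )


def is_scalability_gain(
    old_tps: float,
    new_tps: float,
    new_requirements: NodeRequirements,
    budget: NodeRequirements,
) -> bool:
    """True only if throughput increases AND node requirements stay within budget."""
    return new_tps > old_tps and new_requirements.within(budget)


# Hypothetical budget for "commercially available hardware".
budget = NodeRequirements(cpu_cores=4, bandwidth_mbps=25, storage_gb=2000)

# Higher throughput on the same hardware: a scalability gain.
same_hardware = is_scalability_gain(15, 100, budget, budget)

# Higher throughput bought with data-centre hardware: not a gain under this definition.
big_hardware = is_scalability_gain(
    15, 1000, NodeRequirements(cpu_cores=32, bandwidth_mbps=1000, storage_gb=20000), budget
)
```

Under this definition a raw TPS figure says nothing by itself, because the second argument to the comparison (who can still run a node) is missing.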

I’m sure that, to a reader, this discussion may appear strange. Why are we talking about blockchain scalability? And how is this relevant to proof of stake, especially as the upgrade does not immediately increase transaction throughput in a meaningful manner? The answer is that the upgrade has the under-appreciated feat of laying the groundwork to support future scalability solutions.

Monolithic to Modular

Let’s fast-forward a few years from the block size wars. We now have better terminology to illustrate how this dilemma impacts the design of a blockchain system: a design can be described as either monolithic or modular.

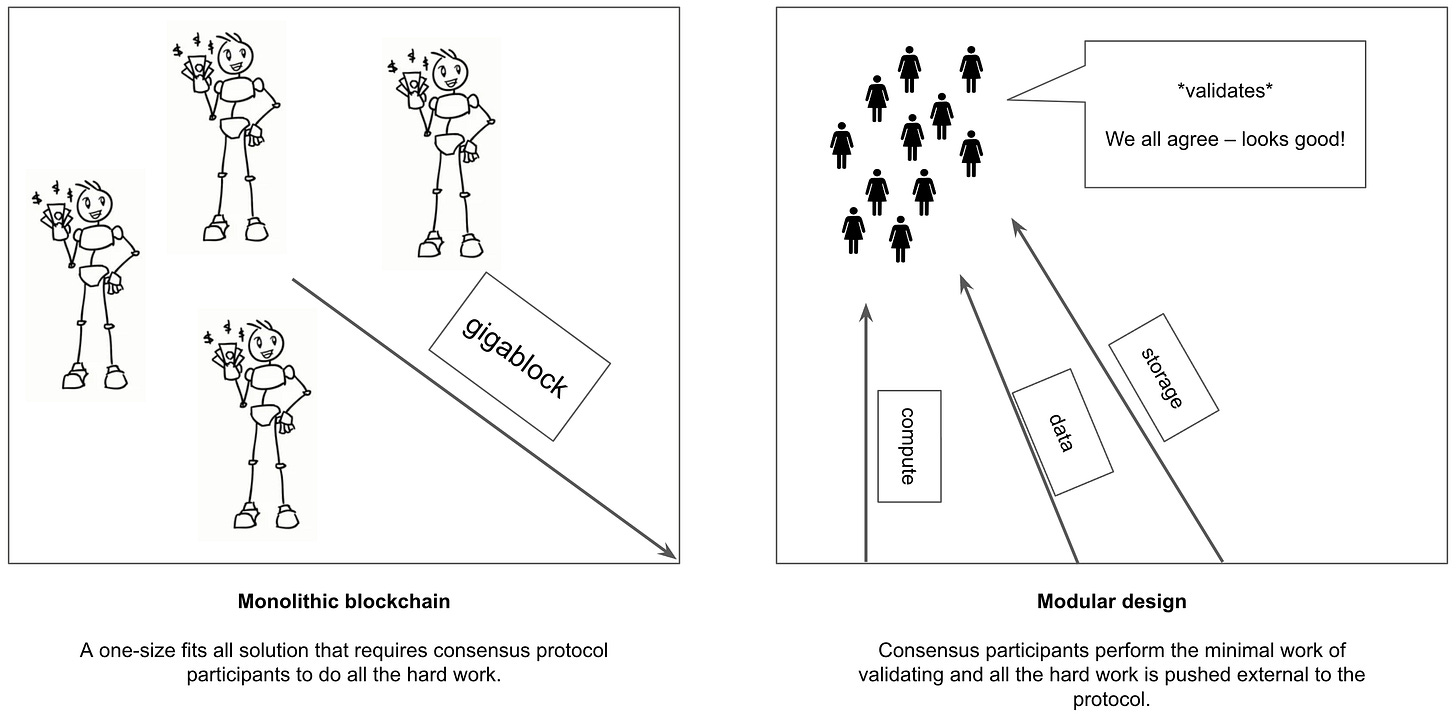

The Monolithic Blockchain. It is a blockchain protocol and implementation that tries to find a one-size-fits-all solution to all hardware bottlenecks, such as how transactions are executed, how data is propagated, and how the database is stored. The network operators are forced to perform all the hard work and run the entire monolithic implementation, which ultimately restricts who can participate in the consensus protocol.

Resources become layers. A major force behind the design of proof of stake Ethereum and its long-term roadmap is to define each resource as a new layer. As separate layers, the problems can be isolated and self-contained, allowing teams to build a new software client to solve each specific problem. This is called the modular blockchain design.

Arguably the most significant insight for the modular approach is how it has changed the perception of consensus participants. It is no longer about burdening them with the maximum workload. Instead it focuses on:

What is the minimum work a block proposer can do? And is it possible to push all the unnecessary hard work elsewhere?

The answer is yes.

Validators as light clients. Designing a blockchain protocol is no longer about maximising the work of network operators. In fact, it is the opposite: the network operators (Validators) should be light clients who simply check that the hard work was performed elsewhere.

It is why I personally like the name Validator. Their only job is to validate, reach agreement (consensus) and in the end protect all user assets recorded in the Ethereum database. Long term, it should only require commercially available hardware and a good internet connection to participate.

Additionally, making Validators light clients may finally solve the problem that running node software has so far relied on altruism. Users can finally be paid to validate the blockchain and check its integrity in real time. Plus, we can check that they are actually doing this job too.
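
The idea of checking work rather than redoing it can be illustrated with a Merkle inclusion proof. This is a generic sketch and not how Ethereum Validators are actually implemented: the function names are hypothetical, SHA-256 is an arbitrary choice, and the number of leaves is assumed to be a power of two. One party hashes every item to build the tree, while the checker only needs a handful of hashes to confirm that an item is included.

```python
import hashlib
from typing import List, Tuple

# Each proof step is (sibling_hash, sibling_is_on_the_left).
Proof = List[Tuple[bytes, bool]]


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root_and_proof(leaves: List[bytes], index: int) -> Tuple[bytes, Proof]:
    """Heavy side: hash every leaf and return the root plus a proof for leaves[index].

    Assumes len(leaves) is a power of two.
    """
    level = [h(leaf) for leaf in leaves]
    proof: Proof = []
    while len(level) > 1:
        sibling = index ^ 1  # the neighbour that shares a parent with `index`
        proof.append((level[sibling], sibling < index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify_inclusion(leaf: bytes, proof: Proof, root: bytes) -> bool:
    """Light side: recompute the path to the root using only the proof."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


leaves = [b"tx0", b"tx1", b"tx2", b"tx3"]
root, proof = merkle_root_and_proof(leaves, 2)  # done by whoever does the hard work
ok = verify_inclusion(b"tx2", proof, root)      # done by the light client
```

For a tree of n leaves the checker handles roughly log2(n) hashes, which is the kind of asymmetry that lets verification run on modest hardware.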

The Two Blockchains

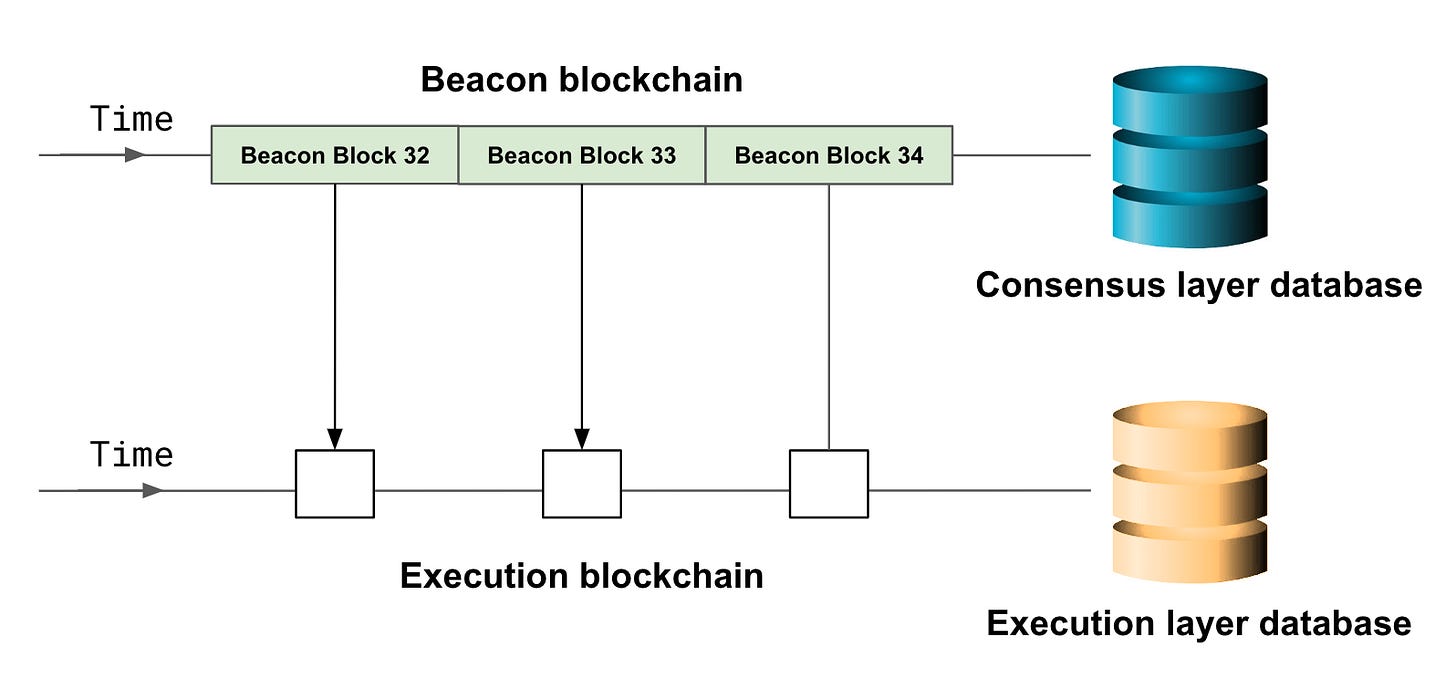

The Merge represents the first milestone in moving the Ethereum specification towards a modular design.

It separated global consensus on the ordering of transactions from the final execution of those transactions. Alongside that, it introduced the notion of two blockchains:

Execution blockchain. The original Ethereum blockchain that processes user-generated transactions and smart contract execution. It is sometimes called the ETH1 blockchain.

Consensus blockchain. A blockchain dedicated to the consensus layer. It is responsible for deciding the canonical chain of the execution blockchain and recording the proof of stake transcript. It is sometimes called the beacon blockchain.

Two software clients. The proof of work module, and more generally any code that decides which chain is canonical, can be removed from the original Ethereum node software. The entire execution environment remains intact and is now called an execution client. To learn the canonical execution chain, it relies on a separate software client that handles the consensus layer (the “consensus client”), which supplies it with new blocks and the current chain head.
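
The division of labour between the two clients can be sketched as a toy model. Everything here is hypothetical (class names, method names, and the balance-transfer "transactions"), and real clients communicate over a network API that this sketch does not model. The point is only that the execution side applies transactions and holds state, while the consensus side decides which blocks make up the canonical chain.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Transfer = Tuple[str, str, int]  # (sender, recipient, amount)


@dataclass
class ExecutionClient:
    """Executes transactions; has no opinion on which chain is canonical."""
    state: Dict[str, int] = field(default_factory=dict)
    head: Optional[str] = None

    def execute(self, block_id: str, transfers: List[Transfer]) -> bool:
        """Apply a block's transfers, rejecting the whole block if any overspends."""
        trial = dict(self.state)  # work on a copy so a bad block changes nothing
        for sender, recipient, amount in transfers:
            if trial.get(sender, 0) < amount:
                return False
            trial[sender] -= amount
            trial[recipient] = trial.get(recipient, 0) + amount
        self.state, self.head = trial, block_id
        return True


@dataclass
class ConsensusClient:
    """Tracks the canonical chain and hands agreed blocks to the execution client."""
    execution: ExecutionClient
    canonical: List[str] = field(default_factory=list)

    def on_agreed_block(self, block_id: str, transfers: List[Transfer]) -> bool:
        # Only blocks that execute successfully extend the canonical chain.
        if self.execution.execute(block_id, transfers):
            self.canonical.append(block_id)
            return True
        return False


execution = ExecutionClient(state={"alice": 10})
consensus = ConsensusClient(execution)
first = consensus.on_agreed_block("b1", [("alice", "bob", 4)])
second = consensus.on_agreed_block("b2", [("bob", "carol", 99)])  # overspends
```

In this sketch `ExecutionClient` could be swapped for another implementation without touching `ConsensusClient`, which is the property the multi-client ecosystem depends on.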

Since the execution blockchain and its clients mostly remain intact, our articles will focus on the consensus layer, which implements the proof of stake protocol.

Multi-client ecosystem. Thanks to the above separation, several teams have emerged that independently work on either consensus or execution. In both cases they must follow a universal specification but are free to experiment with the implementation details. There is a thriving (and surprisingly well-funded) multi-client ecosystem. For example, consensus clients include Teku and Prysm, and execution clients include Geth, Erigon and Nethermind.

Validators are encouraged to spread themselves across different combinations of consensus and execution clients. It is the first line of defence against consensus-level bugs, as the same bug is unlikely to be replicated across multiple independent implementations. This software engineering practice is called N-version programming.

If a supermajority of Validators ignore this advice and run the same client, it can lead to a doomsday scenario. We’ll find out more about this shortly.
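
As a rough sketch of what "supermajority" means here, the check below flags any client run by at least two thirds of Validators. The two-thirds threshold is our assumption for this example, the client names are placeholders, and the counts are invented rather than real network data.

```python
from fractions import Fraction
from typing import Dict, Optional

# Assumed threshold for a supermajority; not taken from the text above.
SUPERMAJORITY = Fraction(2, 3)


def supermajority_client(validators_by_client: Dict[str, int]) -> Optional[str]:
    """Return the client run by a supermajority of Validators, or None."""
    total = sum(validators_by_client.values())
    if total == 0:
        return None
    for name, count in validators_by_client.items():
        # Fractions keep the comparison exact, with no floating-point rounding.
        if Fraction(count, total) >= SUPERMAJORITY:
            return name
    return None


# Invented distributions, for illustration only.
concentrated = supermajority_client({"client_a": 70, "client_b": 20, "client_c": 10})
diverse = supermajority_client({"client_a": 40, "client_b": 35, "client_c": 25})
```

In the concentrated case a single bug in `client_a` would affect most of the network at once, whereas in the diverse case the same bug would be confined to a minority of Validators.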

A final note

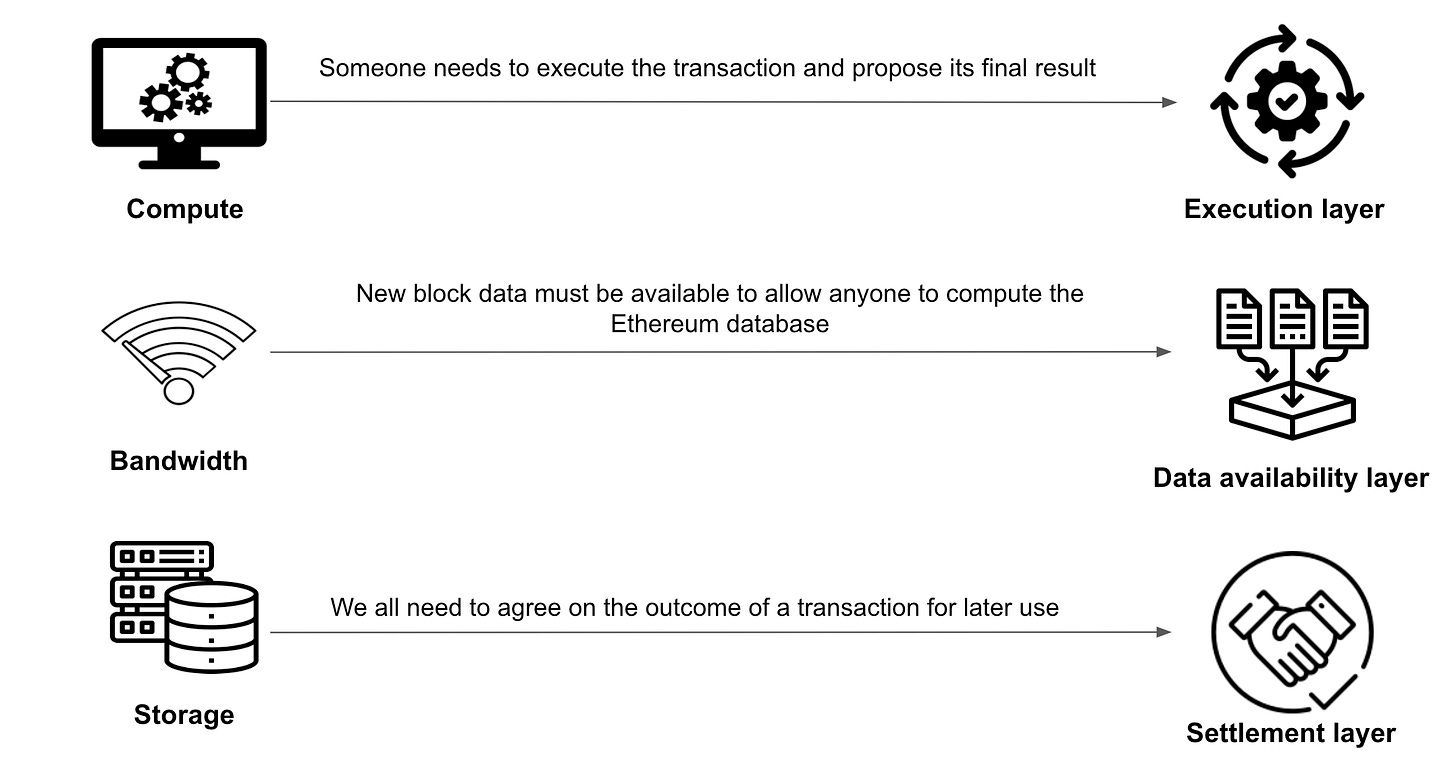

A keen reader may have noticed the use of ‘data availability, execution, and settlement’ layers, whereas proof of stake Ethereum has only laid the foundations for a consensus layer and an execution layer.

The long-term roadmap is working towards:

Consensus → Data Availability. All Validators reach agreement on the ordering of data, and proto-danksharding helps cement that role.

Rollups → Execution layers. Rollups take on the role of execution layers.

ETH1 → Settlement layer. The original Ethereum blockchain is the root of trust that protects all user-defined assets.

The naming can be confusing and relies on future iterations of the Ethereum protocol. For now, we simply stick to the consensus and execution layers, as these are what is implemented and deployed.

Very insightful article. For example, I completely agree with the ceteris paribus definition of scalability: "Scalability is not just about increasing throughput at all costs and instead it should be defined as the following: Increasing the transaction throughput while still adhering to the same set of compute, bandwidth and storage requirements to run a fully validating node." The scalability improvement needs to be a Pareto improvement. Or, where a Pareto improvement is not possible, we must at least document the opportunity cost of improving scalability, i.e. the trade-off between affordability and verifiability. How much verifiability do we sacrifice in order to gain one unit of affordability?