Deconstructing Rollups

What really defines the rollup? The community or a validating bridge?

A very interesting talk by Kelvin Fichter argues that zk rollups do not exist and explains how rollups actually work. Let’s take a fun snippet from it:

Kelvin (paraphrasing): You are happy, on Ethereum, with some money. Then there’s this scary bridge smart contract, it’s also on Ethereum, and it gets you across to Optimism land. You send your money to the bridge, num num num, it spits the money out onto Optimism.

We know the Sequencer can be malicious, so we need to turn the bridge contract into a security guard and give it some ability to protect the rollup. We add some proofs, like fault proofs, which is like giving it an AK-47. But everyone knows that zk rollups are better than optimistic rollups because the marketing team said so, so adding a zk proof is like giving it another AK-47, but it’s gold-plated.

Now we are doubly protecting the rollup. No one is getting past the bridge contract with two AK-47s, and we know this is how the bridge contract protects the rollup, right?

Wrong. Every single thing here is wrong. If you think this is basically how rollups work, you are wrong.

It is a fun talk to watch, but let’s summarise his arguments:

The development community is too focused on defining a rollup through the lens of a validating bridge.

If other rollup projects are not careful, they will ultimately constrain and hamper what their rollup can do, just as he experienced when building Optimism’s OVM v1.0, which was later abandoned (~October 2021).

Rollups should be built first with the rules enforced by a community and only afterwards we should focus on building a validating bridge for it.

It motivated me to write a short deconstruction of rollups and explore the ideas in his presentation. The discussion covers:

What are the basic components of a rollup?

How can we think of “write permissions” for transacting on a rollup?

What are the fundamental trust assumptions when transacting on a rollup?

How can a rollup send and receive messages to other systems?

What does it mean to bridge assets onto a rollup?

Hopefully, we will land at the same arguments made by Kelvin and work out what comes first — the rollup or the validating bridge?

Computing the Database

The only purpose of a rollup, and a blockchain in general, is to allow all parties to compute the same consistent view of a database. If we can all view, and write to, the same database, then it provides a platform for us to transact with each other and interact with programmable smart contracts without a trusted intermediary.

There are two basic ingredients for building an open database:

Rule set (state transition function): All parties must agree to the same rules on how to parse updates to the database (“data blobs”).

Data availability layer: A public bulletin board that takes the data blobs, decides their ordering, and publishes the total ordering of data blobs for anyone to download/read.

Together, they allow anyone to access the same data blobs, parse them according to the same rules, and compute the same database.
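To make the two ingredients concrete, here is a minimal Python sketch that treats the rule set as a pure function folded over the ordered blobs. The JSON transfer format and the names `apply_blob` and `compute_database` are hypothetical choices for illustration, not part of any real rollup. The property it shows is determinism: anyone who downloads the same ordered blobs and applies the same rules gets the same database.

```python
import json
from typing import Dict, List

Database = Dict[str, int]


def apply_blob(db: Database, blob: bytes) -> Database:
    """Hypothetical rule set: a blob is a JSON transfer {"from", "to", "amount"}.

    Malformed or invalid blobs are skipped rather than raising an error, so every
    party that parses the same blobs ends up with the same database.
    """
    try:
        tx = json.loads(blob)
        sender, recipient, amount = tx["from"], tx["to"], int(tx["amount"])
    except (ValueError, KeyError, TypeError, OverflowError):
        return db  # unparseable blob: ignore it
    if not (isinstance(sender, str) and isinstance(recipient, str)):
        return db  # addresses must be strings
    if amount <= 0 or db.get(sender, 0) < amount:
        return db  # invalid transfer: ignore it
    new_db = dict(db)
    new_db[sender] -= amount
    new_db[recipient] = new_db.get(recipient, 0) + amount
    return new_db


def compute_database(genesis: Database, ordered_blobs: List[bytes]) -> Database:
    """Fold the rule set over the total ordering published by the DA layer."""
    db = dict(genesis)
    for blob in ordered_blobs:
        db = apply_blob(db, blob)
    return db


genesis = {"alice": 10}
blobs = [
    b'{"from": "alice", "to": "bob", "amount": 4}',
    b"not json at all",
    b'{"from": "bob", "to": "carol", "amount": 9}',  # bob only has 4
]
print(compute_database(genesis, blobs))  # {'alice': 6, 'bob': 4}
```

Skipping malformed blobs, rather than rejecting them, matters here: the DA layer publishes whatever it is paid to publish, so the rule set has to define a result for every possible blob.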

The next step is to decide who can publish a data blob.

We need to define a user group. It can be a fixed set of parties, parties with certain attributes, or fully open (777, in Unix permission terms). The write permissions must be implemented both at the data availability layer and in the rule set so that a user can publish a data blob for all other parties to pick up.

In practice, the DA-layer implements an open policy and regulates who can transact through a transaction fee market: as long as a user is willing to pay the fee, they can post a data blob. Additionally, the DA-layer can record details such as the sender’s Ethereum address and the block number, which the rule set can use to decide the priority for processing data blobs.

For example, if the Sequencer publishes a data blob, then the rule set can verify it was sent by them and prioritise their data blobs before all other data blobs.
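Here is a small sketch of how a rule set might use the metadata recorded by the DA layer to prioritise the Sequencer. The sequencer address is hypothetical, and applying the priority within each block (rather than globally) is an assumption made for illustration; the text above only says the Sequencer’s blobs are processed first.

```python
from dataclasses import dataclass
from typing import List

SEQUENCER = "0xSequencer"  # hypothetical sequencer address agreed in the rule set


@dataclass(frozen=True)
class DARecord:
    """One published blob plus the metadata the DA layer records for it."""
    sender: str
    block_number: int
    blob: bytes


def processing_order(records: List[DARecord], sequencer: str = SEQUENCER) -> List[bytes]:
    """Order blobs for processing: by block, with the sequencer's blobs first in
    each block, and DA order preserved within each group (the index breaks ties)."""
    ranked = sorted(
        enumerate(records),
        key=lambda item: (
            item[1].block_number,
            0 if item[1].sender == sequencer else 1,
            item[0],
        ),
    )
    return [record.blob for _, record in ranked]


records = [
    DARecord("0xAlice", 100, b"alice-tx"),
    DARecord(SEQUENCER, 100, b"seq-batch-1"),
    DARecord("0xBob", 101, b"bob-tx"),
    DARecord(SEQUENCER, 101, b"seq-batch-2"),
]
print(processing_order(records))
# [b'seq-batch-1', b'alice-tx', b'seq-batch-2', b'bob-tx']
```

The DA layer itself stays neutral; the priority exists only because every client runs the same rule set over the recorded sender field.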

Fundamental Security Assumptions

The separation of data availability and applying the rule set brings us to the fundamental security assumptions for a rollup.

Who is running the data availability layer? A rollup assumes that the data availability layer is a separate entity that is readily available and trustworthy as a platform for posting data blobs.

Operators for the data availability layer have the power to view, re-order or drop published blobs that may be used by the rollup. The operators participate in a consensus protocol and in nearly all blockchain systems the consensus protocol assumes that a majority of the participants will honestly follow the protocol (“honest majority assumption”). For example, the operators can be miners in PoW Bitcoin, stakers in PoS Ethereum, or a new blockchain system set up to provide this exact service (like Celestia).

If this trust assumption holds, then the rollup can safely assume the total ordering of data blobs will be finalised and remain final.

How do we all agree on the rule set? A rule set is a specification of how the data blobs should be parsed. It should be agreed upon by all users and implemented in a software client. If users agree upon a single rule set, then they can ascribe value to that rule set and ultimately to a single database. The value may be financial in nature or may simply support another use case (like an oracle).

If we want to change the rules, then a governance mechanism is required to enable a group of stakeholders to reach agreement on the change. There are many governance mechanisms that a rollup can implement. For example, it can rely on fuzzy consensus like in Bitcoin, transparent Snapshot voting, or simply encode the rules in the DA-layer for eternity.

If a sub-community emerges that wants to modify the rule set, and not all parties agree, then the sub-community can peacefully change the rules and create a new fork of the blockchain. We have already witnessed forks like BTC/BCH and ETH/ETC. In both cases, the market continues to ascribe value to the different rule sets, and communities have formed around each of them.
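A fork needs no cooperation from the DA layer: both communities download the same ordered blobs and simply apply different rules. The sketch below uses a hypothetical rule set whose only parameter is a maximum blob size, loosely echoing the block-size dispute behind BTC/BCH, and shows the two communities computing two different databases from identical data.

```python
from typing import Callable, List

Database = List[bytes]
RuleSet = Callable[[Database, bytes], Database]


def make_rule_set(max_blob_size: int) -> RuleSet:
    """Build a toy rule set whose only parameter is the maximum blob size.
    Blobs within the limit are appended to the database; larger ones are ignored."""
    def apply(db: Database, blob: bytes) -> Database:
        return db + [blob] if len(blob) <= max_blob_size else db
    return apply


def compute(rule_set: RuleSet, ordered_blobs: List[bytes]) -> Database:
    db: Database = []
    for blob in ordered_blobs:
        db = rule_set(db, blob)
    return db


# Both communities read the same ordered blobs from the same DA layer.
blobs = [b"small", b"a much larger blob"]
original = make_rule_set(max_blob_size=8)
fork = make_rule_set(max_blob_size=32)  # the sub-community raises the limit

print(compute(original, blobs))  # [b'small']
print(compute(fork, blobs))      # [b'small', b'a much larger blob']
```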

To summarise, the security of a rollup relies on two sets of parties:

We need to trust the operators who run the data availability layer,

We need to trust that a community will agree on the same rule set and ascribe real-world value to it.

We typically assume the data availability layer “comes for free in the security analysis” and as a result projects mostly focus on how the rule set can be defined/enforced.

Message Passing to Other Systems

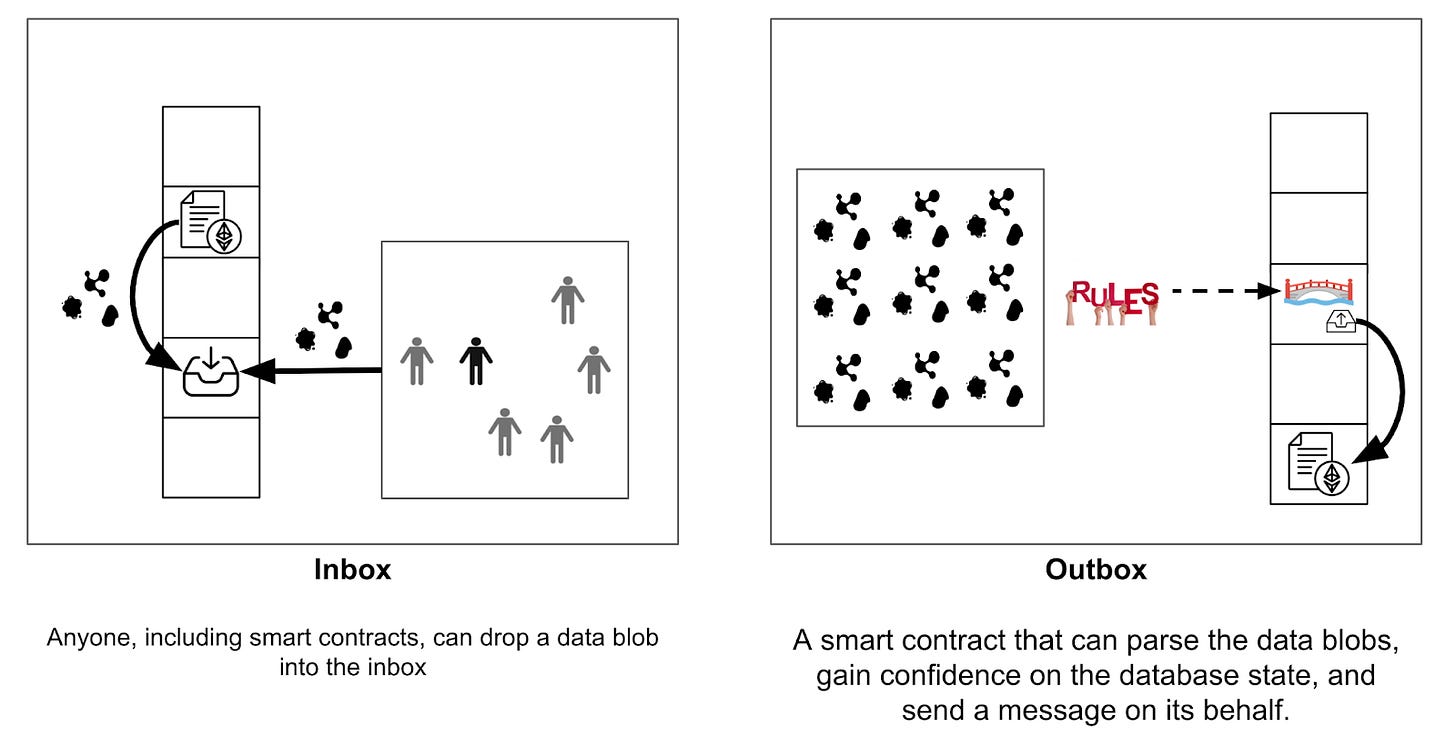

Unfortunately, so far, the rollup lives in a silo. This brings us to the topic of bridge engineering and how to enable communication with other systems. We need two components, an inbox and an outbox for the rollup, to allow a bridge to connect the rollup to other systems. We dive into each component in more detail below.

The inbox accepts data blobs from users. It is a single location where users can find the data blobs. A useful feature for the inbox is to record the sender’s identity alongside a timestamp. It can be implemented as a smart contract with an “accept blob” function that takes a data blob and records it together with the sender’s identity and the current block number.

For example, if Uniswap wants to send a message to a rollup, then it can call the inbox smart contract’s function for accepting data blobs. All users can find the data blob alongside the timestamp, parse it according to the rule set, and verify that Uniswap sent a message for use in the rollup.
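The inbox can be modelled in a few lines of Python. This is a sketch under stated assumptions: the names `Inbox`, `InboxEntry`, and `accept_blob` and the `0xUniswap` address are hypothetical, and in an actual smart contract the sender and block number would be read from the execution context (for example `msg.sender` and `block.number` in Solidity) rather than passed in by the caller. Note that the inbox never interprets the blobs; parsing is left entirely to the rule set.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class InboxEntry:
    sender: str        # who posted the blob
    block_number: int  # when it was posted (the "timestamp")
    blob: bytes        # opaque payload; the inbox never parses it


@dataclass
class Inbox:
    """Toy model of an inbox contract: an append-only, ordered list of blobs."""
    entries: List[InboxEntry] = field(default_factory=list)

    def accept_blob(self, blob: bytes, sender: str, block_number: int) -> int:
        # In a real contract, sender and block_number would come from the execution
        # context (msg.sender and block.number in Solidity), not from the caller.
        self.entries.append(InboxEntry(sender, block_number, blob))
        return len(self.entries) - 1  # position in the inbox's total order

    def messages_from(self, sender: str) -> List[InboxEntry]:
        return [entry for entry in self.entries if entry.sender == sender]


inbox = Inbox()
inbox.accept_blob(b"hello rollup", sender="0xAlice", block_number=16_999_999)
position = inbox.accept_blob(b"price update", sender="0xUniswap", block_number=17_000_000)
print(position, inbox.messages_from("0xUniswap"))
```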

The outbox takes messages from the rollup and passes them to other systems. It is implemented as a smart contract, and it must have a mechanism to gain confidence about the rollup’s database state before passing on a message.

So, the focus for the outbox is not necessarily how it sends the message, but how it gains confidence the message is valid before sending it. There are two approaches:

Trusted authorities. One, or multiple authorities, attest to the database state.

Light client. A smart contract, with an external/human assistant, has the capability to independently verify the database state.
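Here is a minimal sketch of the first approach, assuming a hypothetical k-of-n committee. Signature verification is deliberately omitted (an attestation is just an authority name mapped to the state root it vouches for), and hashing the full state with SHA-256 stands in for a real state commitment with Merkle proofs. The point to notice is what the outbox checks: that enough known authorities agree, never that the state they attest to is correct.

```python
import hashlib
import json
from typing import Dict, Set


def state_root(state: Dict) -> str:
    """Stand-in state commitment: SHA-256 of the canonical JSON encoding."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


class AttestationOutbox:
    """Outbox that trusts a hypothetical k-of-n committee of authorities.

    Signature checks are omitted: an attestation is simply authority -> claimed root,
    and using a dict means each authority can vote at most once.
    """

    def __init__(self, authorities: Set[str], threshold: int):
        self.authorities = authorities
        self.threshold = threshold
        self.accepted_roots: Set[str] = set()

    def submit(self, root: str, attestations: Dict[str, str]) -> bool:
        # Count only known authorities that vouch for this exact root.
        votes = sum(
            1 for who, claimed in attestations.items()
            if who in self.authorities and claimed == root
        )
        if votes >= self.threshold:
            self.accepted_roots.add(root)
            return True
        return False

    def relay(self, state: Dict, message: str) -> bool:
        # Pass a message on only if it is in a state the committee has attested to.
        # Nothing here checks that the state was computed with the correct rules.
        return state_root(state) in self.accepted_roots and message in state.get("outgoing", [])


state = {"balances": {"alice": 6}, "outgoing": ["withdraw alice 2"]}
root = state_root(state)
outbox = AttestationOutbox(authorities={"auth-1", "auth-2", "auth-3"}, threshold=2)

# "mallory" is not a committee member, so only two votes count.
print(outbox.submit(root, {"auth-1": root, "auth-2": root, "mallory": root}))  # True
print(outbox.relay(state, "withdraw alice 2"))  # True
```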

There is a subtle detail we need to discuss about light clients before moving on. The purpose of a light client is to read the content of a database. More often than not, a light client protocol will verify what decision was made by a consensus protocol, but it will not check that the decision was correct. For example, a light client that verifies non-interactive proofs of proof-of-work (NIPoPoWs) only reduces the computation required to check the total weight of a PoW blockchain fork, but it doesn’t check that the decision is right. As a result, this class of light clients still ultimately relies upon one or multiple authorities to attest to the decision.

In the context of rollups, and our article, a light client should verify that the decision by the external authority is correct. This can only be achieved by implementing a mechanism that empowers the bridge to independently check the computational integrity that underpins the authority’s decision. We know how to implement such a mechanism with a fault proof or validity proof system.
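To contrast with the committee model, here is a toy optimistic outbox that does check correctness: anyone can challenge a claimed state root during a challenge window, and the outbox re-executes the rule set over the blobs to settle the dispute. This is a simplified stand-in for a fault proof, assuming the outbox can read all blobs and re-run the whole computation; production fault proof systems instead use an interactive bisection game to narrow the dispute down to a single step. The toy rule set, `CHALLENGE_WINDOW`, and all names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, List

CHALLENGE_WINDOW = 7  # hypothetical challenge period, in blocks


def apply_blob(db: Dict[str, int], blob: bytes) -> Dict[str, int]:
    """Toy rule set: a blob b"name:amount" credits `amount` to `name`; bad blobs are skipped."""
    try:
        name, amount = blob.decode().split(":")
        value = int(amount)
    except ValueError:  # also covers UnicodeDecodeError
        return db
    new_db = dict(db)
    new_db[name] = new_db.get(name, 0) + value
    return new_db


def execute(blobs: List[bytes]) -> Dict[str, int]:
    db: Dict[str, int] = {}
    for blob in blobs:
        db = apply_blob(db, blob)
    return db


def root_of(db: Dict[str, int]) -> str:
    return hashlib.sha256(json.dumps(db, sort_keys=True).encode()).hexdigest()


@dataclass
class Claim:
    root: str
    posted_at: int
    refuted: bool = False


class OptimisticOutbox:
    """Light-client outbox that checks the computation, not just who signed."""

    def __init__(self, blobs: List[bytes]):
        self.blobs = blobs  # assumes the outbox reads the same DA layer as the inbox
        self.claims: List[Claim] = []

    def propose(self, root: str, now: int) -> int:
        self.claims.append(Claim(root, now))
        return len(self.claims) - 1

    def challenge(self, claim_id: int, now: int) -> bool:
        """Within the window, re-execute the rule set and refute a mismatching claim."""
        claim = self.claims[claim_id]
        if now <= claim.posted_at + CHALLENGE_WINDOW and not claim.refuted:
            claim.refuted = root_of(execute(self.blobs)) != claim.root
        return claim.refuted

    def is_final(self, claim_id: int, now: int) -> bool:
        claim = self.claims[claim_id]
        return not claim.refuted and now > claim.posted_at + CHALLENGE_WINDOW


outbox = OptimisticOutbox([b"alice:5", b"bob:3", b"garbage"])
honest = outbox.propose(root_of({"alice": 5, "bob": 3}), now=100)
dishonest = outbox.propose(root_of({"alice": 500}), now=100)
print(outbox.challenge(dishonest, now=103))  # True: re-execution disagrees
print(outbox.challenge(honest, now=103))     # False: the claim is correct
print(outbox.is_final(honest, now=108), outbox.is_final(dishonest, now=108))  # True False
```

A single honest challenger is enough to refute a wrong claim, which is the 1 honest party assumption in action. The flip side is that a wrong claim nobody challenges still becomes final once the window closes.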

In most cases, a light client outbox requires access to all data blobs, and we normally assume it shares the same data availability layer as the inbox. There is interesting work, like Celestia’s light clients, focusing on how to transport data blobs from one DA-layer to another, enabling outboxes for a rollup to be implemented on different blockchain networks.

Separating the inbox and outbox. We often assume the inbox and the outbox are intertwined inside the bridge. This is not the case. The inbox can be a separate entity and the bridge should access it, like any other smart contract, when sending data blobs into the rollup.

Bridge engineering is about the outbox and how it gains confidence about the rollup’s database. Again, it may rely on attestations from authorities or on a light client to check the database’s validity. We call the latter a validating bridge. Other smart contracts and projects can evaluate how the bridge is implemented and opt in to trust messages that originate from it.

Representing and Protecting Assets

Now, just like any cryptocurrency system, it is essential to represent assets on the rollup database and enable the transfer of ownership. There are two approaches for bringing assets into the rollup, and generally speaking, any blockchain system.

Native issuance is when an asset is minted/issued/created on the rollup database. For example, an ERC20 contract is deployed on the rollup and it airdrops tokens to a set of users. It is the simplest case, as we only need to consider the security of the rollup and the set of parties with influence to change the rule set for parsing the data blobs. We should highlight that there is already precedent for a community to change the rule set and modify the balances of natively issued assets. For example, Ethereum reverted The DAO hack in 2016, and the Steem community forked the project into Hive with a modified rule set that removed Justin Sun’s access to his Hive tokens.

Bridged assets require the asset to be locked with a bridge operator on a different system and for the operator to issue the same quantity of assets on the rollup. The assets issued by the operator represent a potential claim to the underlying locked collateral. If a user wants to withdraw the assets back to the origin blockchain, then the user can “burn the coins” on the rollup as a signal for the bridge operator to then return the underlying collateral back to the user on the origin system.
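The lock, mint, and burn flow can be sketched as a single toy object, assuming for simplicity that the bridge operator’s custody and the rollup-side balances live in one Python class (in reality they sit on two different systems). The class name and methods are hypothetical. The invariant worth checking is that the claims issued on the rollup always sum to the locked collateral.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class LockAndMintBridge:
    """Toy model of a bridged asset. `locked_collateral` is custody on the origin
    chain; `rollup_balances` are the claims the operator has issued on the rollup."""
    locked_collateral: int = 0
    rollup_balances: Dict[str, int] = field(default_factory=dict)

    def deposit(self, user: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.locked_collateral += amount  # lock on the origin chain
        self.rollup_balances[user] = self.rollup_balances.get(user, 0) + amount  # issue claim

    def transfer(self, sender: str, recipient: str, amount: int) -> None:
        """A transfer on the rollup moves claims; the collateral does not move."""
        if amount <= 0 or self.rollup_balances.get(sender, 0) < amount:
            raise ValueError("insufficient rollup balance")
        self.rollup_balances[sender] -= amount
        self.rollup_balances[recipient] = self.rollup_balances.get(recipient, 0) + amount

    def withdraw(self, user: str, amount: int) -> int:
        """Burn the claim on the rollup, then release the same amount of collateral."""
        if amount <= 0 or self.rollup_balances.get(user, 0) < amount:
            raise ValueError("cannot burn more than the user holds")
        self.rollup_balances[user] -= amount  # burn on the rollup
        self.locked_collateral -= amount      # release on the origin chain
        return amount

    def fully_backed(self) -> bool:
        return sum(self.rollup_balances.values()) == self.locked_collateral


bridge = LockAndMintBridge()
bridge.deposit("alice", 10)
bridge.transfer("alice", "bob", 4)
released = bridge.withdraw("bob", 4)
print(released, bridge.locked_collateral, bridge.fully_backed())  # 4 6 True
```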

If the assets are bridged, then it is no longer up to the rollup’s community to decide which rule set will protect the assets. The bridge operator, who has custody of the funds and is charged with protecting them, has sole discretion to pick which rules to follow. If the community changes the rules, like in the case of Steem, then the bridge operator can simply ignore the modified rules and refuse to honour withdrawals.
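The power the bridge operator holds can be shown with two rule sets applied to the same transactions. In this sketch, a hypothetical community fork ignores transfers out of a frozen account (loosely modelled on the Steem/Hive example), while the bridge keeps deciding withdrawals with the original rules. The account names, rule sets, and `Bridge` class are all illustrative assumptions.

```python
from typing import Callable, Dict, List, Tuple

Transfer = Tuple[str, str, int]  # (sender, recipient, amount)
RuleSet = Callable[[Dict[str, int], Transfer], Dict[str, int]]
FROZEN = "mallory"  # hypothetical account the forked community decides to freeze


def original_rules(db: Dict[str, int], tx: Transfer) -> Dict[str, int]:
    sender, recipient, amount = tx
    if amount <= 0 or db.get(sender, 0) < amount:
        return db
    new_db = dict(db)
    new_db[sender] -= amount
    new_db[recipient] = new_db.get(recipient, 0) + amount
    return new_db


def forked_rules(db: Dict[str, int], tx: Transfer) -> Dict[str, int]:
    """Same as the original rules, except transfers out of FROZEN are ignored."""
    if tx[0] == FROZEN:
        return db
    return original_rules(db, tx)


def compute(rules: RuleSet, genesis: Dict[str, int], txs: List[Transfer]) -> Dict[str, int]:
    db = dict(genesis)
    for tx in txs:
        db = rules(db, tx)
    return db


class Bridge:
    """Whoever operates the bridge (people or a contract) picks the rule set used
    to decide withdrawals; the rollup community's choice is not consulted."""

    def __init__(self, rules: RuleSet):
        self.rules = rules

    def honours_withdrawal(self, genesis: Dict[str, int], txs: List[Transfer],
                           user: str, amount: int) -> bool:
        return compute(self.rules, genesis, txs).get(user, 0) >= amount


genesis = {"alice": 5, FROZEN: 5}  # balances minted by earlier bridge deposits
txs = [(FROZEN, "bob", 5)]
bridge = Bridge(original_rules)

print(compute(forked_rules, genesis, txs))                # {'alice': 5, 'mallory': 5}
print(bridge.honours_withdrawal(genesis, txs, FROZEN, 5))  # False
print(bridge.honours_withdrawal(genesis, txs, "bob", 5))   # True
```

The community’s fork says mallory still owns 5 tokens, but because the bridge holds the collateral, only its rule set decides who can actually withdraw.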

We need to consider who the bridge operator is. If the bridge relies on trusted authorities to attest to the database state, then the authorities pick the rules. For example, stablecoin issuers like Tether (USDT) will confirm which rule set they plan to follow if there is a proposed upgrade on Ethereum. On the other hand, if the bridge relies on a light client to validate the rules, then the rules are enshrined in the smart contract, and changing them requires going through the contract’s explicit upgrade process.

What Can We Learn From This?

Going back to Kelvin’s argument — should we focus more on the rollup over the validating bridge?

Before we dive in, the debate has little to do with the DA-layer. Thanks to the idea of a modular roadmap, the DA-layer is a separate beast and we can simply assume it is trustworthy alongside any smart contracts deployed on top of it.

The debate comes down to who defines the rule set for parsing the data blobs and the power struggle on how the rule set is ascribed value.

If all assets are natively minted on the rollup and there are no bridges, then the power struggle focuses on how the community collectively agrees to the rule set. It is not dissimilar to how a blockchain system like BTC/ETH is considered valuable. The best examples of a “silo” rollup include Mastercoin and Colored Coins, which lived on Bitcoin a very long time ago.

If there are bridges, then the power struggle really comes down to how much influence the bridge operator can wield relative to the wider community for deciding which rule set is valuable. After all, if the community decides to change the rules, then the bridge operator can simply ignore it and only permit withdrawals according to the old rules. If the bridge holds 90% of all issued assets, then its sway may dominate over all other opinions.

Note that the bridge operator does not need to be human; it may very well be a validating bridge smart contract.

This brings us to the debate about whether the definition for a rollup should be viewed through the lens of a validating bridge or if it is ultimately up to the community.

We don’t think it is one or the other, but both. We believe this thanks to Kelvin’s insight about separating the inbox and the outbox. The inbox has little to do with enforcing the rules; it should simply be a central repository for collecting data blobs.

Since a bridge’s outbox simply reads the database, and the bridge drops messages into a globally shared inbox, there can be more than one bridge. In fact, we can easily imagine a world of many bridges with various trust assumptions. There can even be multiple validating bridges, each protecting a separate basket of assets according to its own enshrined rules for the rollup.

Not everything is rosy in a world of multiple validating bridges, however, as practical issues arise from the fragmentation of assets. Every bridge is responsible for protecting its locked-up collateral and issuing its own liabilities on the rollup. If there are competing bridges, especially validating bridges, then we may end up with several variants of the same asset, like aETH, bETH, cETH, etc.
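Fragmentation is easy to see in a toy ledger keyed by token symbol. In this sketch, two hypothetical bridges both lock ETH, but each issues its own wrapped token and only redeems the liabilities it issued itself, so a user’s aETH and bETH are not interchangeable even though both are backed by ETH. The `WrappedEthBridge` class and ledger layout are assumptions for illustration.

```python
from collections import defaultdict
from typing import DefaultDict, Tuple

# Rollup-side ledger keyed by (token symbol, holder).
Ledger = DefaultDict[Tuple[str, str], int]


class WrappedEthBridge:
    """Hypothetical bridge that locks ETH and issues its own wrapped token."""

    def __init__(self, symbol: str):
        self.symbol = symbol
        self.locked_eth = 0

    def deposit(self, ledger: Ledger, user: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.locked_eth += amount
        ledger[(self.symbol, user)] += amount

    def withdraw(self, ledger: Ledger, user: str, symbol: str, amount: int) -> int:
        # Each bridge only honours the liabilities it issued itself.
        if symbol != self.symbol:
            raise ValueError(f"{self.symbol} bridge cannot redeem {symbol}")
        if amount <= 0 or ledger[(symbol, user)] < amount:
            raise ValueError("insufficient balance")
        ledger[(symbol, user)] -= amount
        self.locked_eth -= amount
        return amount


ledger: Ledger = defaultdict(int)
a_bridge, b_bridge = WrappedEthBridge("aETH"), WrappedEthBridge("bETH")
a_bridge.deposit(ledger, "alice", 3)
b_bridge.deposit(ledger, "alice", 2)

# alice holds 5 "ETH" on the rollup, but as two non-interchangeable tokens.
try:
    b_bridge.withdraw(ledger, "alice", "aETH", 3)
except ValueError as err:
    print(err)  # bETH bridge cannot redeem aETH
```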

So far, from our perspective, there are several reasons why users have flocked to a single validating bridge and typically assume that a single smart contract defines the rollup:

Avoid fragmentation of assets on the rollup,

Inherit validation of rule set from parent blockchain (1 honest party assumption),

Network effects as all other projects trust the same outbox.

Most importantly, and not to be overlooked, the primary reason everyone looks at a rollup through the lens of a bridge is that only a single implementation is available for every rollup, so there is no competition. There are no competing validating bridges because building one is a mammoth task.

This brings us to another key part of Kelvin’s talk.

How should we approach building a validating bridge? And are there gotchas to consider?

According to him, we can hinder a rollup’s full potential if we focus on building the rollup through the lens of a validating bridge, as we are always trying to fit the rollup within the constraints of the bridge, and this can lead to a lack of good abstractions for dealing with hard problems. It is the exact issue that led to the deprecation of OVM v1 around October 2021, and zkEVMs may run into the same issues shortly.

On the other hand, if we only focus on building a rollup and not consider issues that may arise with a validating bridge, then it is very well possible to slip up with features that are very difficult to implement as a light client smart contract. A good example is the old and very janky PoW algorithm for Ethereum. It was not light client friendly and led to academic papers attempting to make it work.

So, perhaps like everything, both arguments are true to some extent. We should focus on building a rollup, but keep in mind to use primitives that are light client friendly. It may also help to test it against different validating bridge implementations. This leads to the multi-prover architecture by Vitalik and the multi-verifier architecture described by Toghrul. I suspect the zkEVMs may attempt this type of architecture, but ultimately fail due to nitty-gritty details that were chosen to make them work with their specific light client implementation.
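A multi-prover setup can be sketched as a simple agreement rule, in the spirit of (but not copied from) Vitalik’s proposal: accept a state root only when at least `threshold` independent provers report the same root. The function name, prover names, and roots below are hypothetical. With a 2-of-3 threshold, a bug in any single proof system can neither push through a wrong root on its own nor, as long as the other two agree, block the correct one.

```python
from collections import Counter
from typing import Dict, Optional


def multi_prover_root(reports: Dict[str, Optional[str]], threshold: int = 2) -> Optional[str]:
    """Accept a state root only if at least `threshold` independent provers report
    the same root. A prover that failed or found nothing reports None."""
    votes = Counter(root for root in reports.values() if root is not None)
    winners = [root for root, count in votes.items() if count >= threshold]
    # Refuse to pick a side if the threshold is low enough for two roots to pass.
    return winners[0] if len(winners) == 1 else None


print(multi_prover_root({"fault_proof": "0xabc", "zk_proof": "0xabc", "committee": "0xdef"}))
# 0xabc
print(multi_prover_root({"fault_proof": "0xabc", "zk_proof": "0x999", "committee": None}))
# None
```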

To conclude, the key takeaway is the disentanglement of the inbox and the outbox. An outbox is a read-only bridge that can pass on messages from the rollup. With a little extra implementation, it can hold assets and drop messages into the inbox. I’m excited to see how this new mental model will impact the design of rollups and the implementation of validating bridges.

Hopefully this has helped you appreciate Kelvin’s talk a bit more alongside some of the insights I gleaned from it. It was very informative indeed frens!