An overview of the peer to peer network

There is a single problem at the heart of cryptocurrency:

Data availability problem. Data about the blockchain must be publicly available.

Put another way, how can we guarantee that any party can get a copy of the blockchain?

The data allows anyone to compute a copy of the database and check it is the same as all other databases. It may sound like a strange problem, but access to data is essential to protect the system’s liveness. For example, if a block proposer cannot get a copy of the blockchain (and database), then they cannot perform their role and propose a new block.

It is easy to shrug off the problem of data availability as theoretical and unimportant. But we have already witnessed data availability problems: Ripple lost the first 32,569 ledgers (blocks) due to an issue with replication. At the same time, the local policy of Bitcoin nodes has been abused to discourage the propagation of valid transactions, a related issue that matters for defending against censorship.

The answer for solving data availability is straightforward: information propagates via a gossip protocol, which is implemented as a peer to peer network. The bare minimum guarantee provided by a gossip protocol is:

Recent data is propagated and published to all online peers at the time of its release.

This insight is the foundation for the future Ethereum roadmap. While the peer to peer network has historically provided extensive functionality including the ability to download the entire blockchain and a copy of the database, at its heart it only needs to make the recent transactions and block data publicly available for all to download.
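To make the gossip guarantee concrete, here is a minimal sketch of flooding a message across a toy network. The random topology, the function names `build_network` and `gossip`, and the idea of counting hops are all assumptions made for illustration; real clients relay with delays, limits and validation that are not modelled here.

```python
import random
from collections import deque


def build_network(num_peers, degree, seed=0):
    """Toy topology (an assumption): every peer opens `degree` links to random others."""
    rng = random.Random(seed)
    neighbours = {peer: set() for peer in range(num_peers)}
    for peer in range(num_peers):
        others = [other for other in range(num_peers) if other != peer]
        for other in rng.sample(others, degree):
            neighbours[peer].add(other)
            neighbours[other].add(peer)  # an open connection carries data both ways
    return neighbours


def gossip(neighbours, origin):
    """Flood a message from `origin`.

    Returns a dict mapping each peer that received the message to the number
    of hops it took to arrive.
    """
    hops = {origin: 0}
    queue = deque([origin])
    while queue:
        peer = queue.popleft()
        for neighbour in neighbours[peer]:
            if neighbour not in hops:  # each peer forwards a message only once
                hops[neighbour] = hops[peer] + 1
                queue.append(neighbour)
    return hops


network = build_network(num_peers=200, degree=8)
reached = gossip(network, origin=0)
print(f"{len(reached)} of {len(network)} online peers received the message")
```

Only peers that are online and connected at the time of release appear in the result, which mirrors the minimum guarantee stated above.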

For the rest of this article, we will cover more details about:

Who is a peer?

What is the network’s topology?

How does a peer find other peers to connect with?

How are blocks and transactions propagated?

What types of nodes exist and how do they synchronise?

Who is a peer? What is their duty?

A peer is a node on the network, and running one is an altruistic duty.

There is no easy method to monetize the functionality or for the network to issue a reward. Nodes are run by organisations who require the data for business purposes or community members who have a stake in the long-term success of the network. For example, an organisation may run a node to ensure the data presented to a user is both fresh and accurate.

Nodes on the network are responsible for supporting the following functionality:

Availability of slots and connections. Allow others to run nodes, connect to the network, and find information about the cryptocurrency.

Propagate information. Pass on useful information including connection details of other peers and pending transactions.

Serve the blockchain. Allow anyone to retrieve a copy of the blockchain and a snapshot of the database.

What is the topology? Is it really a mesh network?

We don’t really know the network’s topology, weird right?

There has been some work in the past, namely AddressProbe and TxProbe, to paint a picture of the network’s topology.

AddressProbe study. In 2014, the study showed the presence of a mesh network as mining pools and cryptocurrency exchanges maintained widespread connections. The average degree of a node was ~8 (active connections), which is consistent with the default implementation of Bitcoin Core at the time. The topology was not random and there was a bias in how nodes connected with each other. The authors of the study suggested the DNS seeds played a role in this bias.

TxProbe study. In 2018, we did not learn new information about the public mainnet, but the authors did run the probe on Bitcoin’s public testnet. The method to compute the topology relied on abusing a bug with orphan transactions and it was fixed here.

This type of research raises a series of interesting questions:

Should the network’s topology be public?

Should the community be able to monitor the network’s connections in real-time?

Should we measure the connections to identify how decentralised it truly is?

Developers have argued that the topology should remain hidden to avoid helping an attacker isolate a node from the peer to peer network (an eclipse attack).

While the developers only represent a single (albeit influential) opinion and it doesn’t end the debate, their answer does highlight a core security assumption:

1-honest party assumption. As long as a node can connect to one honest peer, it’ll always find the longest and heaviest blockchain.
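The assumption can be illustrated with a small sketch. It assumes each connected peer reports a chain tip together with its cumulative proof of work, and that the node has already verified that work (so an attacker cannot simply claim a bigger number). `ChainTip` and `select_best_tip` are hypothetical names for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChainTip:
    block_hash: str
    total_work: int  # cumulative proof of work, assumed to be verified already


def select_best_tip(tips):
    """Pick the heaviest chain among everything the connected peers reported."""
    return max(tips, key=lambda tip: tip.total_work)


# Seven attacker-controlled peers serve a lighter fork; one peer is honest.
reported = [ChainTip("attacker-fork", 900)] * 7 + [ChainTip("honest-chain", 1000)]
best = select_best_tip(reported)
print(best.block_hash)
```

A single honest connection is enough for the heaviest chain to show up in the candidate set, which is why eclipsing every connection of a node is the attack to worry about.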

How does your node find peers to connect with?

There are two phases for a node to connect to the peer to peer network:

Trusted bootstrapping. A trusted setup to find the first set of peers to connect with.

Advertisement on the network. Record connection information for new peers as it is passed along the peer to peer network.

IRC to DNS seeds

Back in 2010, a new node on the network connected to a well-known IRC channel to fetch a list of active peers. However, as discussed here, IRC was a central point of failure as active peers were forcefully disconnected from the channel.

It was replaced with several DNS seeds operated by well-known and trusted members of the community, alongside some hard-coded IP addresses which act as a last resort. An example implementation of a seeder for Bitcoin demonstrates that the seed will rank peers found on the network and return a random sample back to the requester. In Ethereum, the DNS seeds in go-ethereum are run by the Ethereum Foundation. To the best of my knowledge, there is no rigorous work studying the implementation of DNS seeds.

Advertise your node

A node will periodically advertise its connection details to other peers and the information includes:

Connection information. The IP address and port.

Services (version). The capabilities this node can provide to the network.

Timestamp. The freshness of this advertisement message.

The node sends an addr message to its connected peers and a subset of the peers will forward it to their connected peers. Eventually, most peers on the network will receive a copy of it.

On the other hand, if a node wants to find new peers, it can sample addresses from its connected peers using getaddr. The connected peer returns up to 1,000 addresses with an addr message. You can read more about it here.
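The advertisement flow above can be sketched as follows. This is a simplified model rather than the wire format: `PeerAddress`, `merge_addr` and `handle_getaddr` are hypothetical names, the address book is a plain dictionary, and the only number taken from the text is the limit of 1,000 addresses per reply.

```python
import random
from dataclasses import dataclass

MAX_ADDR_PER_MESSAGE = 1000  # limit quoted in the text above


@dataclass(frozen=True)
class PeerAddress:
    ip: str
    port: int
    services: int   # bit field describing the capabilities the node offers
    timestamp: int  # unix time, used to judge how fresh the advertisement is


def merge_addr(address_book, adverts):
    """Handle an incoming addr message: keep the freshest advert per (ip, port)."""
    for advert in adverts[:MAX_ADDR_PER_MESSAGE]:
        key = (advert.ip, advert.port)
        known = address_book.get(key)
        if known is None or advert.timestamp > known.timestamp:
            address_book[key] = advert


def handle_getaddr(address_book, rng=random):
    """Answer a getaddr request with a random sample of known addresses."""
    known = list(address_book.values())
    return rng.sample(known, min(len(known), MAX_ADDR_PER_MESSAGE))


book = {}
merge_addr(book, [PeerAddress("203.0.113.7", 8333, services=1, timestamp=1_700_000_000)])
reply = handle_getaddr(book)
print(len(reply), "address(es) returned")
```

Returning a random sample rather than the full address book keeps replies small and makes it harder for a requester to enumerate everything a peer knows.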

Incoming and outgoing connections

All nodes maintain a set of connections:

Incoming connections. Other nodes have opened connections with this node.

Outgoing connections. This node has opened connections with other nodes.

It is crucial that incoming connection slots are available on the network as it provides capacity for new peers to join the network.

Homework: Based on the total number of observable nodes and the default setting for incoming connections, what is the estimated total number of connection slots available?

Based on up to date documentation, the default setting is to establish 11 outgoing connections with distinct roles:

Full relay connections (8). All transactions, blocks and other messages are relayed.

Block relay only connections (2). Only blocks are relayed in this connection to help hide the network’s topology.

Feeler connection (1). A short-lived connection to test whether locally kept addresses are still connectable.

The motivation for outgoing connections is to prevent an attacker from clogging up all incoming connections and eclipsing the node from the peer to peer network. This makes the policy for deciding how to open an outgoing connection important, as it is essential to protecting a node’s view of the blockchain.
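As a rough sketch, the outgoing connection budget listed above can be written as a small table plus a helper that decides which kind of connection to open next. The counts (8, 2 and 1) come from the text; the names `OUTBOUND_TARGETS` and `next_connection_to_open`, and the simple fill-in-order policy, are assumptions for illustration and not how any particular client schedules connections.

```python
# Target number of outgoing connections per role, as listed in the text.
OUTBOUND_TARGETS = {
    "full-relay": 8,        # transactions, blocks and other messages
    "block-relay-only": 2,  # blocks only, to help hide the topology
    "feeler": 1,            # short-lived probe of a locally stored address
}


def next_connection_to_open(open_counts):
    """Return the first role that is below its target, or None if all are full."""
    for role, target in OUTBOUND_TARGETS.items():
        if open_counts.get(role, 0) < target:
            return role
    return None


print(sum(OUTBOUND_TARGETS.values()), "outgoing connections in total")
print(next_connection_to_open({"full-relay": 8, "block-relay-only": 1}))
```

Because the node itself chooses where these connections go, an attacker who fills every incoming slot still cannot control the node's whole view of the network.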

Advertising Pending Transactions

All nodes are equal on the peer to peer network and there is no in-protocol mechanism for identifying a block proposer. If a user wants to send a transaction to a block proposer, then they must send it across the peer to peer network with the expectation that it will reach a block proposer. At the same time, if a block proposer wants to propagate a new block to the other block proposers, then it must also be sent across the peer to peer network.

Request and response protocol

One consideration for the peer to peer network is to avoid spam and prevent transaction propagation from exhausting the bandwidth capacity of nodes on the network.

Naive broadcast. Let’s assume a node will receive the same transaction from all its connected peers. If a node maintains 60 connections and a transaction is 500 bytes, then receiving a single transaction from all connections will consume around 30 KB of bandwidth. If there are around 1 million transactions per day (Ethereum already achieves it), then a node will consume around 30 GB of bandwidth per day just to propagate transactions.
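The naive broadcast figures can be reproduced with a few lines of arithmetic. The inputs are the ones used in the text (60 connections, 500 bytes per transaction, 1 million transactions per day); the function name is hypothetical and decimal units (1 KB = 1,000 bytes) are assumed.

```python
CONNECTIONS = 60
TX_SIZE_BYTES = 500
TXS_PER_DAY = 1_000_000


def naive_broadcast_bytes(connections, tx_size, tx_count):
    """Bytes received when every connection sends a full copy of every transaction."""
    return connections * tx_size * tx_count


per_tx = naive_broadcast_bytes(CONNECTIONS, TX_SIZE_BYTES, 1)
per_day = naive_broadcast_bytes(CONNECTIONS, TX_SIZE_BYTES, TXS_PER_DAY)
print(f"{per_tx / 1e3:.0f} KB per transaction, {per_day / 1e9:.0f} GB per day")
# prints "30 KB per transaction, 30 GB per day"
```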

Smarter broadcast. Clearly, to avoid wasting resources, a node should not receive the same information from a connection if it already has a copy of it. This has led to the peer to peer network adopting a request and response protocol.

Inventory. A peer informs the node it has a copy of the transaction.

Request. The node can request the peer to send the full data.

Transaction. The peer sends the node a copy of the transaction.

A transaction hash is only 32 bytes and it is efficient to flood across the network. The hash allows a node to decide whether it should request the additional data or if they have already received it. We can call it a “hint” that helps a node make the right decision.
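The three steps can be sketched as a tiny in-memory exchange. The class and method names are hypothetical, messages are modelled as direct method calls instead of network packets, and a single SHA-256 is used as the 32-byte identifier purely as a simplification.

```python
import hashlib


def tx_hash(raw_tx):
    """32-byte identifier for a transaction (single SHA-256, a simplification)."""
    return hashlib.sha256(raw_tx).digest()


class Peer:
    def __init__(self):
        self.transactions = {}  # tx hash -> raw transaction bytes

    def add_transaction(self, raw_tx):
        self.transactions[tx_hash(raw_tx)] = raw_tx

    def inventory(self):
        """Step 1 (Inventory): announce the hashes this peer holds."""
        return list(self.transactions)

    def get_data(self, wanted_hash):
        """Step 3 (Transaction): return the full data for a requested hash."""
        return self.transactions[wanted_hash]


class Node(Peer):
    def on_inventory(self, peer, announced):
        """Step 2 (Request): only ask for hashes we have not seen yet."""
        fetched = 0
        for announced_hash in announced:
            if announced_hash in self.transactions:
                continue  # the hint tells us we already hold this transaction
            raw_tx = peer.get_data(announced_hash)
            if tx_hash(raw_tx) != announced_hash:
                continue  # the data does not match the announced hash
            self.transactions[announced_hash] = raw_tx
            fetched += 1
        return fetched


peer_a, peer_b, node = Peer(), Peer(), Node()
for peer in (peer_a, peer_b):
    peer.add_transaction(b"alice pays bob 1 coin")

print(node.on_inventory(peer_a, peer_a.inventory()))  # full data downloaded once
print(node.on_inventory(peer_b, peer_b.inventory()))  # nothing left to request
```

The second announcement costs only the size of the hash, since the node recognises it and never requests the full transaction again.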

Homework: What is the total bandwidth cost if a node has 60 connections and has to receive up to 1 million transaction hashes a day? How does this compare with the naive broadcast approach?

Memory pool

All nodes have a database called the memory pool. It holds a list of pending and valid transactions that are waiting to get confirmed in the blockchain. It serves a few purposes:

Avoid re-downloading transactions. A node will only receive a copy of the transaction once and it avoids the bandwidth issues of re-downloading the same transaction multiple times.

Speed up block propagation. Given some additional information, a node can use its memory pool to re-construct most of a new block.

Back in the day (Bitcoin 2010), it was possible for merchants to rely on 0-confirmation transactions. Generally, a merchant could wait for 10 seconds, and if no double-spend transactions were detected, then it was safe to accept the pending transaction in the memory pool as final. This was possible for two reasons:

Relatively synced. All peers on the network roughly hold the same set of pending transactions.

First-seen rule. A peer will only keep a copy of the transaction it saw first on the network.

Of course, today it is considered a crazy idea. A transaction can be replaced in the memory pool by simply increasing its fee. The 0-confirmation approach was only popular because of the long confirmation times in Bitcoin (~10 minutes per block) whereas the idea was not popular for Ethereum as the announcement of a new block was relatively quick (12 seconds per block).
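The difference between the first-seen rule and fee-based replacement can be shown with a toy memory pool. This is a sketch under simplifying assumptions: a transaction conflicts with another when both spend the same `spends` identifier, replacement only requires a strictly higher fee, and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PendingTx:
    tx_id: str
    spends: str  # the coin (or account and nonce) this transaction consumes
    fee: int


class MemoryPool:
    def __init__(self, allow_fee_replacement):
        self.allow_fee_replacement = allow_fee_replacement
        self.by_spend = {}  # spent identifier -> pending transaction

    def accept(self, tx):
        """Return True if the transaction is now held in the pool."""
        current = self.by_spend.get(tx.spends)
        if current is None:
            self.by_spend[tx.spends] = tx
            return True
        if self.allow_fee_replacement and tx.fee > current.fee:
            self.by_spend[tx.spends] = tx  # the higher fee evicts the earlier one
            return True
        return False  # first-seen rule: keep what arrived first


payment = PendingTx("pay-merchant", spends="coin-1", fee=10)
double_spend = PendingTx("pay-myself", spends="coin-1", fee=50)

first_seen = MemoryPool(allow_fee_replacement=False)
replaceable = MemoryPool(allow_fee_replacement=True)
for pool in (first_seen, replaceable):
    pool.accept(payment)
    pool.accept(double_spend)

print(first_seen.by_spend["coin-1"].tx_id)   # the merchant payment survives
print(replaceable.by_spend["coin-1"].tx_id)  # the double spend replaced it
```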

Type of Nodes

As you can see in Peter McCormack’s tweet, the terminology surrounding different types of nodes is confusing and very often difficult to compare. The reason is simple: node software is a technical detail that most ‘normies’ should never have to think about to use the network. Unfortunately, elements of the Bitcoin community often peddle fake news about Ethereum node software.

In the following, we will try to dispel some of the mystery surrounding node software by understanding:

Node software purpose. What is the desired use-case and capabilities of the node?

Synchronisation approach. How does a node compute a copy of the database using the blockchain?

Node Purpose

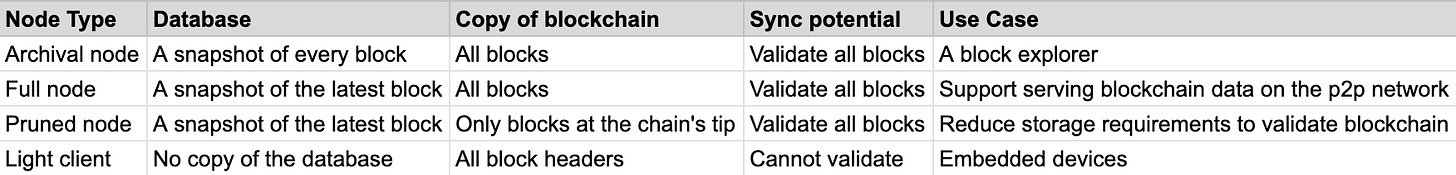

Table 1 highlights four popular types of nodes implemented by the community. A lot of the fake news focuses on archival, full and pruned nodes. This is because most observers don’t really understand their purpose or desired use case.

For example, a node that is destined to be the backbone of a block explorer (Archival node) is not appropriate for a home-user who wants a copy of the database (Pruned node). Furthermore, if you are a user who wants to altruistically support the peer to peer network and serve copies of the blockchain to others, then you will want to run a full node.

This is why the Peter McCormack tweet is fake news. It is not fair to compare the storage requirements of an archival node, which focuses on quick retrieval of all historical data, with those of a full node, which is designed to support the capabilities of the peer to peer network. The debacle led to a wonderful article by BitMEX Research on node types, which I recommend reading, and eventually Peter McCormack retracted his statement.

Finally, the goal of a light client is not to keep a copy of the blockchain, and it cannot naively validate the blockchain’s integrity by replaying all transactions. Most light clients validate the leader election (proof of work) to find the longest and heaviest chain. With the block headers, it is possible for others to convince a light client about the state of the blockchain and database. As we will see in Lecture 7, the bridge contracts implemented by rollups are effectively light clients.

Full Synchronisation Approach

The goal of synchronisation is for a node to take a copy of the blockchain that is fetched from the network and independently compute a copy of the database.

However, it is not as simple as just re-computing every transaction. There are a few aspects to consider:

Block’s position. The node should check that a block is in the correct position before processing it.

Leader election proof. The node should check the block is authorised to be part of the longest chain (for example, check the attached proof of work).

Validate every transaction. The node should check a transaction is valid by checking its replay protection and transaction fee.

Update database. The node can execute the transaction and apply the update to its database.

There are other implementation-specific checks, like verifying the state commitment for a block. But generally speaking, we call this the full synchronisation approach, or put another way, the naive transaction replay approach, as the node is processing every individual block and transaction.
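The four checks can be sketched as a single function that applies one block to a toy account database. Everything here is a simplified assumption: the block format, the one-hex-zero proof of work target, the nonce-based replay protection, and the choice to simply burn fees are illustrative and do not match any real client.

```python
import hashlib
from dataclasses import dataclass

TARGET_PREFIX = "0"  # toy difficulty: the block hash must start with a hex zero


@dataclass(frozen=True)
class Tx:
    sender: str
    recipient: str
    amount: int
    fee: int
    nonce: int  # replay protection: must equal the sender's next nonce


@dataclass(frozen=True)
class Block:
    height: int
    parent_hash: str
    pow_nonce: int
    transactions: tuple = ()

    def block_hash(self):
        payload = f"{self.height}|{self.parent_hash}|{self.pow_nonce}|{self.transactions}"
        return hashlib.sha256(payload.encode()).hexdigest()


class SyncError(Exception):
    pass


def apply_block(db, tip_height, tip_hash, block):
    """Validate `block` on top of the current tip and return the updated state."""
    # 1. Block's position: it must extend the current tip.
    if block.height != tip_height + 1 or block.parent_hash != tip_hash:
        raise SyncError("block is not in the expected position")
    # 2. Leader election proof: check the attached proof of work.
    if not block.block_hash().startswith(TARGET_PREFIX):
        raise SyncError("invalid proof of work")
    # Work on a copy so that a failing block leaves the database untouched.
    new_db = {account: dict(state) for account, state in db.items()}
    for tx in block.transactions:
        # 3. Validate the transaction: replay protection and ability to pay.
        sender = new_db.get(tx.sender)
        if sender is None or tx.nonce != sender["nonce"]:
            raise SyncError("replayed or out-of-order transaction")
        if tx.amount < 0 or tx.fee < 0 or sender["balance"] < tx.amount + tx.fee:
            raise SyncError("sender cannot cover amount and fee")
        # 4. Update the database (fees are burned in this toy model).
        sender["balance"] -= tx.amount + tx.fee
        sender["nonce"] += 1
        recipient = new_db.setdefault(tx.recipient, {"balance": 0, "nonce": 0})
        recipient["balance"] += tx.amount
    return new_db, block.height, block.block_hash()


def mine(height, parent_hash, transactions=()):
    """Helper for the example: search for a nonce that meets the toy target."""
    pow_nonce = 0
    while True:
        block = Block(height, parent_hash, pow_nonce, tuple(transactions))
        if block.block_hash().startswith(TARGET_PREFIX):
            return block
        pow_nonce += 1


genesis_db = {"alice": {"balance": 10, "nonce": 0}}
block_1 = mine(1, "genesis", [Tx("alice", "bob", amount=3, fee=1, nonce=0)])
db_1, height_1, hash_1 = apply_block(genesis_db, 0, "genesis", block_1)
print(db_1)
```

Full synchronisation is this function called in a loop from the first block to the chain's tip, which is why it is slow for a long chain.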

Downloading the blockchain.

A node is expected to fetch the blockchain from full (or archival) nodes on the peer to peer network.

In the early days of Bitcoin, nodes implemented a block-first approach. They download a block, process it, and then download the next block. But there are two problems with this approach:

Finding the heaviest chain takes a long time. A node will not know it is on the longest and heaviest chain until it is fully synchronised.

Adversary can temporarily trick nodes. An attacker can send the node alternative forks of the blockchain and the node will process each block in turn. Eventually, it’ll find a longer chain and move onto it.

To overcome the above issues, nodes have implemented a headers-first approach. It is similar to light clients in the sense that a node finds the chain of leader elections (proof of work) first. Nodes then go a step further by downloading the block data and performing a full synchronisation to check that all blocks are valid.

The headers-first approach is bandwidth efficient. For example, a block header in Bitcoin is 80 bytes and it costs 57.9 MB to fetch all block headers (for ~723,778 blocks). It is nearly impossible for an adversary to trick the node temporarily as the leader election / proof of work is too difficult to perform at the chain’s tip.
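The header download cost quoted above is simple to reproduce from the two figures in the text, an 80 byte header and roughly 723,778 blocks. Decimal megabytes (1 MB = 1,000,000 bytes) are assumed.

```python
HEADER_SIZE_BYTES = 80
BLOCK_COUNT = 723_778

total_bytes = HEADER_SIZE_BYTES * BLOCK_COUNT
print(f"{total_bytes:,} bytes = {total_bytes / 1e6:.1f} MB")
# prints "57,902,240 bytes = 57.9 MB"
```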

Mixing SPV and Full Synchronisation.

In Table 1, we have a column for sync potential and not necessarily the sync approach implemented for the popular node type. This may seem strange at first, but while archival, full and pruned nodes have the potential to perform full synchronisation, there is no guarantee they do as it is very slow to perform.

Some node implementations like go-ethereum offer alternative approaches called fast-sync and snap-sync. They can be summarised as follows:

Fetch a copy of the database. The node retrieves a copy of the entire database (not blockchain) for a specific block height. It contains all account balances, smart contracts, state, etc.

Headers-first sync. The node downloads all block headers and checks the proof of work for a small random sample of blocks.

Database matches state commitment. All block headers (in Ethereum) have a commitment to the entire state of the database. The node will check its copy of the database with the state commitment of a specific block.

Full synchronisation from this point onwards. The node will validate all transactions and blocks from the snapshot block onwards. It will not process all historical transactions and blocks.

In a way, it mixes SPV validation, which finds the longest chain by checking the proof of work, with full synchronisation, as it can check the validity of all future blocks. The random sampling of previous blocks helps the node gain confidence that the peer (who is providing the block headers to the node) has not tampered with the chain of proof of work.
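The steps above can be sketched in a few lines. This is a toy model and not go-ethereum's implementation: the state commitment is a plain SHA-256 over a JSON encoding of the database (an assumption standing in for the real state root), headers are dictionaries with hypothetical `hash`, `parent_hash` and `state_root` fields, and the proof of work check is passed in as a callback.

```python
import hashlib
import json
import random


def state_commitment(database):
    """Toy commitment: hash of a canonical JSON encoding of the whole database."""
    encoded = json.dumps(database, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def snap_sync(snapshot, headers, pivot_height, check_pow, sample_size=16, rng=None):
    """Accept `snapshot` as the database at `pivot_height` if the checks pass."""
    rng = rng or random.Random()
    # Headers-first: every header must link to its parent.
    for parent, child in zip(headers, headers[1:]):
        if child["parent_hash"] != parent["hash"]:
            raise ValueError("broken header chain")
    # Check the proof of work for a small random sample of headers.
    sample = rng.sample(range(len(headers)), min(sample_size, len(headers)))
    for height in sample:
        if not check_pow(headers[height]):
            raise ValueError(f"invalid proof of work at height {height}")
    # The downloaded database must match the pivot block's state commitment.
    if state_commitment(snapshot) != headers[pivot_height]["state_root"]:
        raise ValueError("snapshot does not match the state commitment")
    return snapshot  # full synchronisation continues from pivot_height + 1


snapshot = {"alice": 6, "bob": 3}
headers = [
    {"hash": f"h{i}", "parent_hash": f"h{i - 1}", "state_root": ""}
    for i in range(5)
]
headers[3]["state_root"] = state_commitment(snapshot)

database = snap_sync(snapshot, headers, pivot_height=3, check_pow=lambda header: True)
print("snapshot accepted at height 3:", database)
```

The callback `check_pow` always returns True in this usage example; a real node would verify the header's proof of work there. The sketch makes the trust trade-off visible: historical transactions are never replayed, only the commitment and a sample of proofs are checked.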

Ultimately, the node is trusting the block producers for the validity of historical blocks, but not future blocks. If you would like to learn more about it, check out its documentation.

Fun project: Implement a zero knowledge proof of historical Ethereum blocks, so there is no need to naively replay all transactions. It can be used with snap-sync to help nodes quickly catch up to the latest block.